As we close out another semester, the Social Identity and Morality Lab is also closing a chapter: we are heading into 2025 with a brand new name and look. We are pleased to announce that, starting in January, we will be known as the Center for Conflict and Cooperation. We will be sharing a number of big announcements about the Center here. In the meantime, enjoy this brief summary of our research from the past year.

January: SOCIAL MEDIA & MORALITY

The attention economy of social media can lead people to create and express exaggerated beliefs and carefully curated content designed to capture attention rather than reflect reality or benefit humanity. In January, we published our review on the connection between social media and morality. How does the online environment shape human psychology? One means by which people and groups can capture attention and drive engagement is by sharing morally and emotionally evocative content. For instance, we have found that people will pay greater attention to moral or emotional words as a result of evolutionary pressures that rewarded the ability to navigate social situations—and this is linked to what we share on social media. If moral outrage is a fire, is the Internet like gasoline, accelerating and exaggerating existing moral dynamics? Our paper describes the psychological function and appeal of morality, and how these instincts are harnessed and monetized online. We also review the impact of moral psychology on a broad array of individual and societal issues related to social media.

February: A GLOBAL CLIMATE CHANGE INTERVENTION TOURNAMENT

In February, we covered one of our largest mega-studies to date: a global tournament testing different interventions to motivate climate action. We compared 11 interventions on the same outcome variables in one large experiment, so we could determine how each one stacks up against the others in a controlled test. Overall, the interventions had small but significant effects globally. But their impact varied widely depending on the outcome being targeted and on characteristics of the audience. Sadly, none of the interventions increased effortful behavior toward fighting climate change in the tree-planting task; the effects appeared only on the self-report measures. These results reveal that no intervention offers a silver-bullet solution to climate change. Scholars therefore need to be far more modest in their claims about these messages, and practitioners need to be careful about which interventions they choose to achieve their goals.

March: CAREER ADVICE FOR SCIENTISTS

In March, we did a recap of all the columns Jay helped write for Science Magazine’s Letters to Young Scientists. They cover advice for scientists at every stage of their career, from top 10 tips for succeeding in your PhD application, to how to ace a faculty job talk, to essential things to know as you kickstart your own lab. There are also helpful columns for people who aren’t in science, such as how to resolve conflict, write more effectively, or give a great presentation. Check out the full list in the original newsletter! These columns were originally read by nearly half a million people in Science, and now you can see them summarized in one place and sorted by career stage.

April: A MISINFORMATION INTERVENTION FOR POLARIZED CONTENT

Misinformation is a serious problem on nearly all social media sites. We discussed our paper on a new intervention designed to reduce the spread of misinformation on social media: the “Misleading” count. We designed it to work against polarized content, which is much more resistant to fact checks and accuracy nudges. The intervention adds a “Misleading” count to each social media post, showing how many members of the user’s own political party (their in-group) have marked that post as misinformation. The number of people who reported they would be likely to share misleading posts dropped by 25% in response to the Misleading count showing in-group judgments, compared with 5% in response to an accuracy nudge. These results provide initial evidence that identity-based interventions may be more effective than neutral ones for addressing partisan misinformation.

May: OVERCOMING PARTISAN DIVIDES IN CLIMATE CHANGE

A phenomenon known as the “green gap” reflects the disparity between what people believe about climate change and what they actually do about it. In May, we reviewed our paper about the political divide in climate change beliefs and interventions. Our study examined whether political polarization in climate change beliefs also shows up in climate actions, such as investing effort and time to generate tree-planting donations. Overall, most people around the world (more than 75% of our total sample) believe in human-caused climate change and support a wide variety of policies to reduce it. We observed worldwide polarization in climate change beliefs and policy support, with liberals generally showing higher levels of both than conservatives. However, this polarization did not extend to the effort and time invested in a climate-relevant task: liberals and conservatives were equally likely to engage in the tree-planting donation task.

June: THE IMPACT OF GENERATIVE AI ON SOCIETY

In June, we summarized our new paper on the five impacts of generative AI on society. In the article, we examine the paradox of generative AI (GenAI): its potential to exacerbate socioeconomic inequalities while simultaneously unlocking opportunities for shared prosperity. For example, generative AI could increase the spread of misinformation, but also reduce it; decrease inequalities in the workplace, but also increase them; personalize teaching, but also widen the gender gap in education; help clinicians make better choices, but also lead to mistakes; and either support equitable policymaking or run unchecked, with implications for decades to come. It is an exciting yet daunting moment to be alive, and it comes with heavy responsibilities. Each of us, whether as researchers, policymakers, or citizens, will play a crucial role as an architect of the future and can help steer what could be one of humanity’s greatest innovations, or its worst, toward positive use.

July: MORALITY IN THE ANTHROPOCENE

Just as the atomic bomb drastically changed modern warfare, the internet has had a profound impact on moral psychology. In July, we discussed our theoretical paper about morality in the modern age. Humans have an innate tendency to care about moral issues like fairness, empathy, and reciprocity, traits that were evolutionarily adaptive because they helped small, closely knit groups survive. However, the internet connects over 5 billion people in a brand-new technological environment that has fundamentally altered our social world. We argue that the moral instincts humans developed in small social networks are poorly suited to this large, interconnected environment, because of the internet’s sheer scale and the distance it puts between people. Understanding these challenges is crucial for navigating and shaping the online environment to prevent maladaptive outcomes. While the internet offers many benefits and has been used as a tool for collective organizing and for platforming disenfranchised voices, its structure and incentives currently exploit our moral instincts, leading to negative consequences that should be addressed through thoughtful design and regulation.

August: INTERGROUP MORAL HYPOCRISY

In August, we talked about our replication of a famous moral psychology study. Past research has found that we tend to favor members of groups we belong to and disfavor people who belong to other groups. For example, Piercarlo Valdesolo and David DeSteno conducted a highly cited experiment in which they randomly assigned people to groups and found that people judged the same immoral act (giving a difficult task to someone else and an easy task to yourself) differently depending on who committed it. Giving someone else the harder task was judged to be fairer when participants themselves were the perpetrator, or when someone from their own group was. In a recent paper, we tried to replicate their work to see whether their findings still held. To our surprise, we did not replicate the original result, but our findings nevertheless suggest that group identity does create moral hypocrisy. Overall, people did not judge the act as fairer when it was committed by themselves or an in-group member than when it was committed by others or an out-group member. However, people who identified more strongly with their group did judge in-group members’ actions as fairer. Thus, we did find evidence of moral hypocrisy among highly identified group members.

September: THE IDENTITY-BASED MODEL OF BELIEF

In September, we released an “election edition” of the newsletter, where we talked about our updated model of social identity and belief. The model explains how the push and pull between social identity goals and accuracy goals shapes people’s political beliefs, (biased) information processing, and the spread of (mis)information. According to this model, social identity goals can override accuracy goals, and vice versa. Most people value accuracy much of the time. But when people prioritize social ties and belonging to their party over accuracy, they align their beliefs with other party members rather than with the facts. In the context of (mis)information, this means that people might ignore factual information if it threatens their positive partisan identity, and emphasize flattering and validating information instead. When accuracy takes a back seat, people might believe or share false information simply because it reinforces their positive beliefs about their party, or because they want to signal party loyalty. That said, motivated cognition cannot fully explain partisan differences in belief. Partisan differences can also arise because people are exposed to different information sources. Of course, people are also motivated to select different information diets or to pay attention to some sources over others, so identity often shapes these inputs as well.

October: POLITICAL POLARIZATION AND HEALTH

Much research on political polarization focuses on how it affects politics and party lines. In October, we described the link between political polarization and public health. We have found that political polarization poses significant health risks to individuals and society: it obstructs the implementation of legislation and policies aimed at keeping people healthy, discourages individual action to address health needs (such as getting a flu shot), and boosts the spread of misinformation that can reduce trust in health professionals. As individuals move further from the political center, in either direction, individual and public health indicators tend to deteriorate, including trust in medical expertise, participation in healthy behaviors, and preventive practices ranging from healthy diets to vaccination. Polarization affects what health information people are willing to believe and shapes the health-related actions they are willing to take. Political leaders, inside and outside the US, may make public health worse by linking health behavior to partisan identity rather than to medical needs or expert advice. This undercuts the role of expertise, sidelines approaches grounded in science, and often leads to attacks on medical professionals and the healthcare system. However, policy and leadership decisions can mitigate the potential harm from polarization.

November: HOW SOCIAL MEDIA CREATES A FUNHOUSE MIRROR

In November, we discussed our review on why social media seems to depict a twisted, wonderland version of reality compared to what people experience offline. We argued that social media acts like a funhouse mirror, exaggerating and amplifying certain voices and behaviors while diminishing others. This warping of reality happens because content on social media platforms is dominated by a small, vocal minority of users who post extreme opinions or content. Algorithms further amplify these extremes, giving people the impression that such views and behaviors are the norm, even when they are not. Solving this problem requires changes at both the individual and the systemic level. Social media platforms can, and should, prioritize nuanced and accurate content rather than promoting extreme views for engagement. Users, for their part, need to be more aware of how social media warps their perception of reality; recognizing that online environments amplify extremes can help mitigate their influence. Finally, more studies are needed to understand how online distortions influence offline behavior and how to counteract these effects effectively.

December: December’s newsletter is the one you’re reading now! Thank you for coming along with us on our research journey over the past year, and we hope you’ll join us for whatever the future holds for our Center.

Other News & Announcements

We have a new paper in Nature Reviews Psychology explaining all the ways you can use natural language processing (NLP) to analyze text data. We provide user-friendly recommendations for using NLP in ways that ensure rigor and reproducibility. Here is a free link to the paper, which was co-authored by lab members Claire Robertson, Steve Rathje, and Jay.

Lab postdoc Steve Rathje was selected for the prestigious Forbes 30 Under 30 list! Steve is a psychology researcher who makes TikTok videos about the intersection of social media and psychology for his more than 1 million followers. He has published 34 peer-reviewed articles in journals such as Nature, Science, and PNAS, and he has won the Association for Psychological Science's "Rising Star" Award, among other honors.

In other good news, Steve Rathje won the AXA Research Fund Post-Doctoral Fellowship for his research on “Navigating misinformation and trust erosion in the digital age”!

Lab PhD student Danielle Goldwert won the grand prize for students in APA’s science poster competition, for her poster titled “The Differential Impact of Climate Interventions along the Political Divide in 60 Countries”! It was based on our recent paper in Nature Communications. Congrats to Danielle!

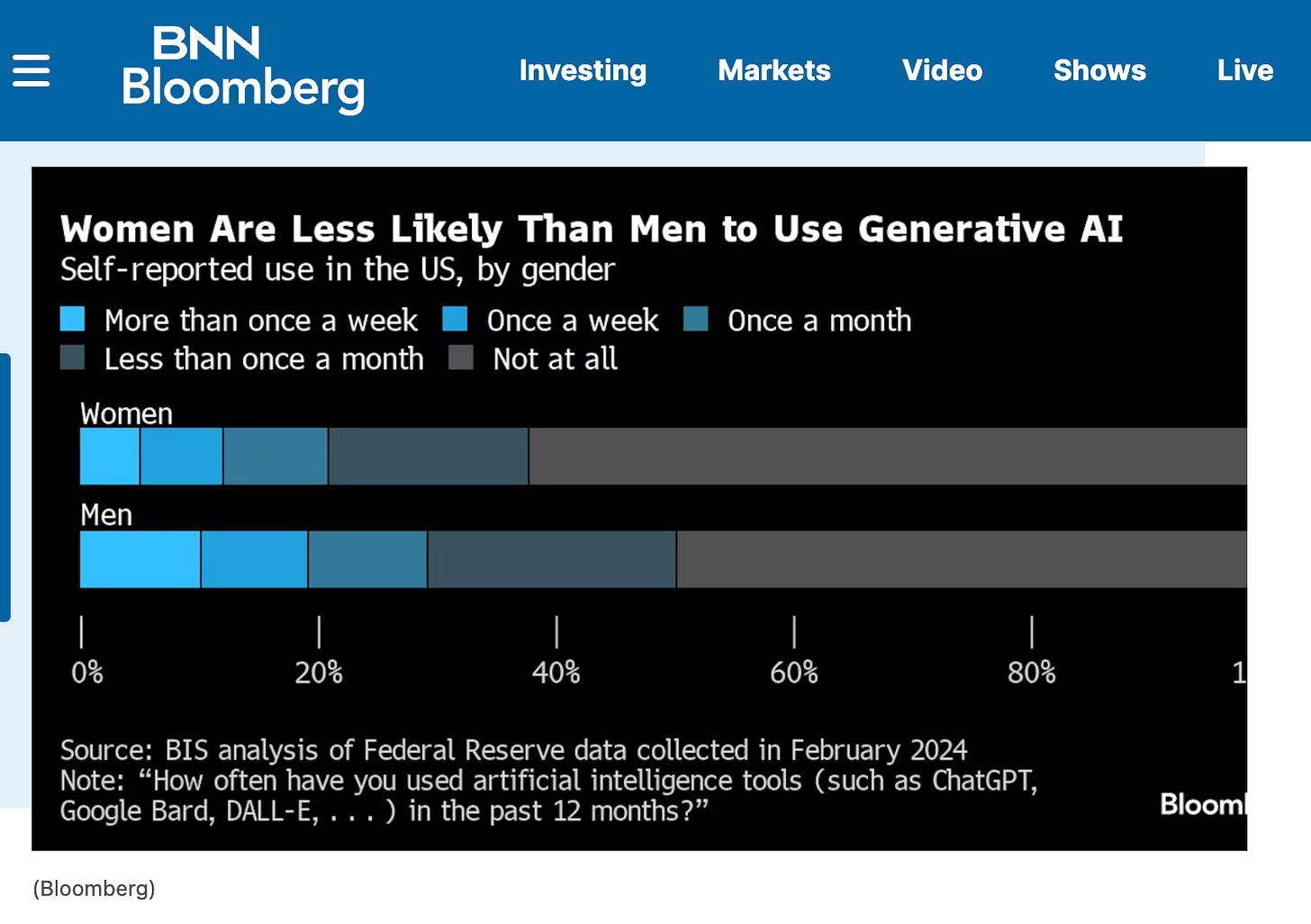

Lab postdoc Laura Globig was interviewed by Bloomberg News about her research on generative AI, specifically on why women are less likely to use generative AI tools. You can read the full article here!

Jay was named to the Ambassador Council of the Alliance for Decision Education, whose members are helping to build a broad understanding of why decision education in schools can benefit individuals and society.

If you have any photos, news, or research you’d like to have included in this newsletter, please reach out to our Lab Manager Sarah (nyu.vanbavel.lab@gmail.com), who puts together our monthly newsletter. We encourage former lab members and collaborators to share exciting career updates or job opportunities. We’d love to hear what you’re up to and to help sustain a flourishing lab community. Please also leave a comment below about anything you like in the newsletter or would like us to add.

And in case you missed it, here’s our last newsletter: