How Social Media Warps Your Reality

What you see online isn't necessarily representative of the real world

Did you know that:

Only 3% of social media accounts are toxic, but they produce 33% of the content.

A mere 0.1% of users are responsible for sharing 80% of fake news.

74% of all online conflicts begin in just 1% of online communities.

What you are seeing online does not reflect what most people believe. In our most recent paper, we argue that social media acts like a funhouse mirror, exaggerating and amplifying certain voices and behaviors while diminishing others. This warping of reality happens because social media platforms are dominated by a small, vocal minority of users who post extreme opinions or content. Algorithms further amplify these extremes, giving people the impression that such views and behaviors are the norm, even when they are not.

A small percentage of users generate the majority of content, and their posts tend to be extreme, polarizing, or highly emotional. Meanwhile, most people ‘lurk’: they either do not post at all or post moderate opinions far less often. As a result, extreme opinions are overrepresented online.
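To put a rough number on how much a small group can skew what people see, here is a back-of-the-envelope sketch in Python. It uses the figure quoted above (3% of accounts producing 33% of content) and assumes, for illustration only, that "content" is a simple count of posts spread evenly within each group. The helper `posting_rate_ratio` is a hypothetical name of our own, not taken from the paper.

```python
def posting_rate_ratio(group_account_share: float, group_content_share: float) -> float:
    """Posts per account in a group divided by posts per account among everyone else.

    Hypothetical helper for illustration. Both arguments are fractions between
    0 and 1, and content is assumed to be counted as a number of posts.
    """
    group_rate = group_content_share / group_account_share
    rest_rate = (1 - group_content_share) / (1 - group_account_share)
    return group_rate / rest_rate


# Figure quoted in this newsletter: 3% of accounts produce 33% of content.
ratio = posting_rate_ratio(0.03, 0.33)
print(f"A toxic account posts about {ratio:.1f} times as often as other accounts on average.")
```

Under these assumptions, a toxic account posts roughly 16 times as often as the average remaining account, so toxic posts fill a third of the content even though only about 3 in 100 accounts produce them. That gap between share of content and share of people exists before any algorithmic amplification is added on top.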

How social norms become distorted online

Social norms are the unwritten rules that dictate acceptable behavior within a group or society. Norms are strongly tied to our social identity: the norms people follow often signal which social group they belong to and strengthen ties with ingroup members. Humans naturally rely on cues from their environment to form a sense of these norms. In offline settings, this process, called ensemble encoding, helps us interpret social signals efficiently by summarizing the average behavior or opinions within a group.

However, on social media, this process becomes distorted because the environment is skewed: the content we summarize comes disproportionately from a small number of users whose posts tend to be extreme, polarizing, or highly emotional, while the moderate majority mostly stays silent.

This small, vocal minority effectively shapes the "norms" people perceive online, leading to what psychologists call pluralistic ignorance—where people incorrectly believe that these exaggerated online norms represent what most others think or do offline.

What are the norms that shape online discourse?

The content promoted on social media is dominated by the most extreme opinions, which are often highly engaging because they spark outrage, debate, or surprise. Negative and divisive content is particularly likely to go viral, as algorithms prioritize posts that generate high engagement (likes, shares, and comments). For example:

News stories that express hostility toward political out-groups are more likely to be shared.

On platforms like Instagram, extreme beauty standards are reinforced through highly curated and filtered images, leading users to believe that unrealistic appearances are the norm.

This skewed environment can have harmful consequences, such as:

Influencing Real-Life Behaviors:

For example, teens exposed to extreme drinking content online may believe that binge drinking is normal and acceptable, increasing the likelihood of risky behavior.

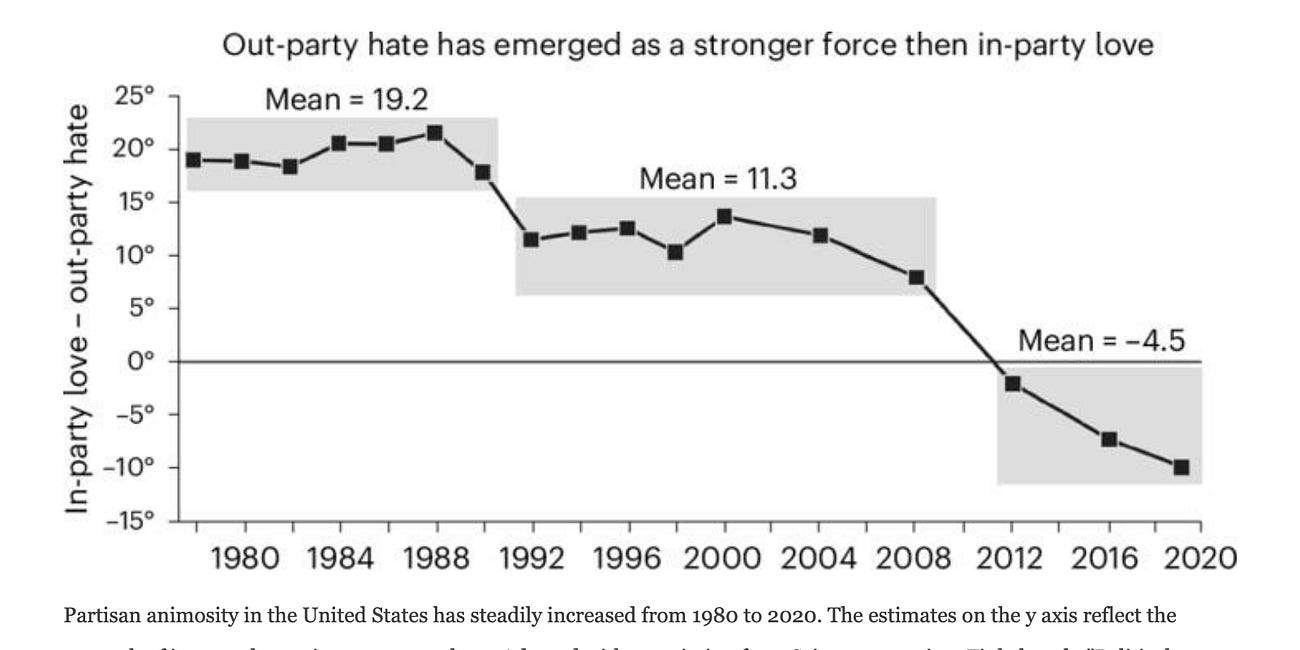

Exaggerating Political Divisions:

Users often believe their political opponents hold more extreme views than they do in reality, leading to hostility and polarization.

Reinforcing Unrealistic Expectations:

Social media feeds filled with perfect success stories or idealized lifestyles can leave people feeling inadequate and contribute to mental health issues like depression and anxiety.

Why false norms are worse online than offline

Social media platforms profit by keeping users engaged, which creates an attention economy. The algorithms that govern our feeds are designed to maximize engagement by showing users content that elicits strong emotions and keeps them online. This creates a feedback loop: because extreme content attracts more attention, users are rewarded for posting more of it.

Posts that are divisive or sensational often perform better, incentivizing the spread of distorted, negative, or extreme content. Social media also allows users to post anonymously or with minimal accountability, making hostile or extreme behaviors more visible. Meanwhile, moderate opinions are often invisible or outright attacked by trolls or content farmers, leading to a warped perception of public sentiment.

What Can Be Done?

Solving this problem requires changes at both individual and systemic levels:

Platform Design: Social media platforms can—and should—prioritize nuanced and accurate content rather than promoting extreme views for engagement.

Critical Thinking: Users need to be more aware of how social media warps their perception of reality. Recognizing that online environments amplify extremes can help mitigate their influence.

Further Research: More studies are needed to understand how online distortions influence offline behaviors and how to counteract these effects effectively.

The "funhouse mirror" of social media creates a world where extremes dominate and moderation or nuance is often invisible. This distortion harms individuals and society by promoting false norms, increasing polarization, and fostering mental health issues.

Without interventions, these online distortions could continue to shape a false sense of reality. There is an urgent need for better awareness and structural changes to ensure that social media reflects a more accurate picture of the world.

Robertson, C. E., del Rosario, K. S., & Van Bavel, J. J. (2024). Inside the funhouse mirror factory: How social media distorts perceptions of norms. Current Opinion in Psychology, 60, 101918. https://doi.org/10.1016/j.copsyc.2024.101918

Projects and Publications

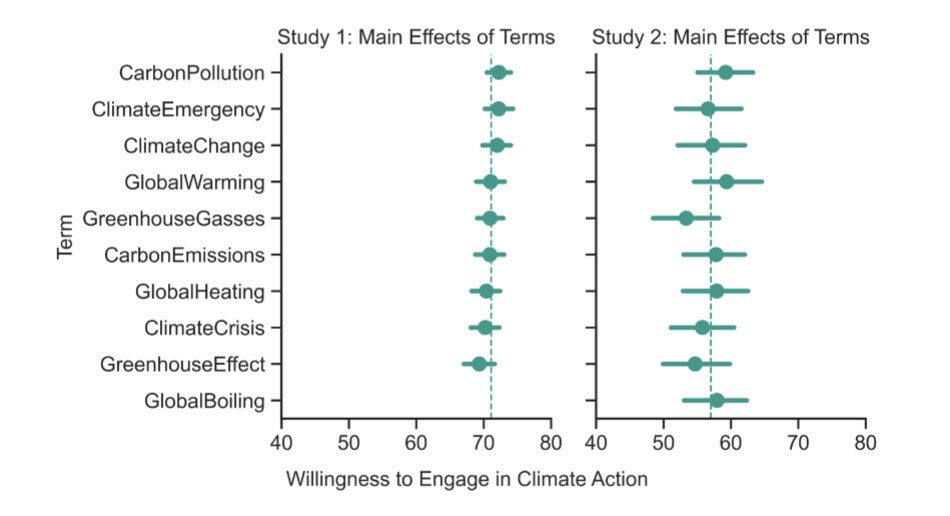

We recently published a paper showing that climate change terminology does not make a difference when encouraging people to take climate action. Journalists and activists have created a number of terms to try to raise awareness about the dangers of global warming, such as ‘climate crisis’ and ‘global boiling’. However, in a global mega-study (N = 6,132 across 63 countries) we conducted on climate beliefs and actions, none of these terms had an effect on whether people chose to take action against climate change! This paper was led by our PhD student Danielle Goldwert, former postdocs Kim Doell and Madalina Vlasceanu, and Jay Van Bavel, and published in the Journal of Environmental Psychology. You can read it in full here.

News and Announcements

Jay was named one of the most highly cited researchers in the world by Clarivate Analytics. This award recognizes that “each researcher selected has authored multiple Highly Cited Papers™ which rank in the top 1% by citations for their field(s) and publication year in the Web of Science™ over the past decade… This list, based on citation activity is then refined using qualitative analysis and expert judgment as we observe for evidence of community-wide recognition from an international and wide-ranging network of citing authors.” Congrats to Jay and all our lab members and collaborators who contributed to these papers!

This summary of our initial article was generated by ChatGPT and edited by Sarah Mughal and Jay Van Bavel. If you have any photos, news, or research you’d like to have included in this newsletter, please reach out to our Lab Manager Sarah (nyu.vanbavel.lab@gmail.com) who puts together our monthly newsletter. We encourage former lab members and collaborators to share exciting career updates or job opportunities—we’d love to hear what you’re up to and help sustain a flourishing lab community. Please also drop comments below about anything you like about the newsletter or would like us to add.

And in case you missed it, here’s our last newsletter:

That’s all for now, folks. Take care, and we’ll see you next month!
