Five Impacts of Generative AI on Society

How Generative AI will impact our information, education, health, and workplace.

“The rise of powerful AI will be either the best, or the worst thing, ever to happen to humanity. We do not yet know which.” - Stephen Hawking, 2016

Generative Artificial Intelligence (GenAI) stands out from traditional automation for its ability to produce novel content: it can generate code, essays, and even poetry. The implications are profound, with the potential to reshape nearly every sector of society. Our new article explores some of the more likely positive and negative effects of GenAI on information and on three information-intensive sectors: work, education, and healthcare.

In the article, we examine the paradox of GenAI: its potential to exacerbate socioeconomic inequalities while simultaneously unlocking extraordinary opportunities for shared prosperity. We propose 72 specific research questions aimed at advancing our understanding of this technology’s implications. Here, we summarize key directions and possibilities for GenAI in five domains of research.

Generative AI could exacerbate the spread of misinformation, but also reduce it

Generative AI promises personalization and broader access to information. However, it also raises substantial concerns about the spread of misinformation through advanced and personalized “deepfakes”. For instance, AI’s capability to micro-target voters with persuasive fake content could significantly impact elections.

Yet, it also opens the door to new applications. One study found that conversations with GenAI can significantly reduce conspiracy beliefs among conspiracy believers. This highlights its potential to answer believers’ complex questions about conspiracies and to reduce false beliefs.

Generative AI could decrease inequalities in the workplace, but also increase them

Previous digital technologies have often skewed benefits towards more educated workers while displacing less-educated workers through automation, a trend known as “Skill-Biased Technological Change”. By contrast, GenAI promises to enhance rather than replace human capabilities, potentially reversing this adverse trend. Studies have found that AI tools like chat assistants and programming aids can significantly boost productivity and job satisfaction, especially for less-skilled workers, which could help reduce inequality.

Nonetheless, uneven access to AI technologies could worsen existing inequalities if people who lack digital infrastructure or skills are left behind. For example, GenAI may not benefit people in the Global South as much in the near term, due to deficits in digital infrastructure, a shortage of local researchers, and limited digital skills training.

Generative AI can personalize teaching, but also increase the gender gap

Generative AI can enhance personal support and adaptability in learning. Chatbot tutors, for instance, are set to transform educational settings by providing real-time, personalized instruction and support. This technology can realize the dream of dynamic, skill-adaptive teaching methods that directly respond to student needs without constant teacher intervention.

Yet, it must be carefully implemented to avoid perpetuating or introducing biases. For instance, one study found that female students report using ChatGPT less frequently than their male counterparts. This disparity in technology use could not only affect academic achievement in the short term, but also contribute to a future gender gap in the workforce.

Generative AI can help clinicians make better choices, but also lead to mistakes

Generative AI could democratize healthcare by augmenting human capacities and reducing workloads, making medical care more accessible and affordable. It can also aid clinicians in diagnosis, screening, prognosis, and triage. For example, one study found that combining human and AI judgement led to better performance than either alone, highlighting effective human-AI collaboration in medical decision-making.

Alas, AI may not improve the diagnostic performance of some expert physicians. Another study found that it may in fact cause incorrect diagnoses in cases that would otherwise have been assessed correctly. This highlights the need for balanced integration that supplements rather than replaces human interaction.

Policy making in the age of generative AI

The widespread adoption of generative AI models has prompted governments globally to develop regulatory frameworks. In the last section of the article, we review the EU, UK, and US approaches. Our main observation is that these regulatory frameworks generally overlook the socioeconomic inequalities influenced by AI deployment.

We propose a number of specific policy suggestions, aimed at balancing AI innovation with social equity and consumer protection. Future regulatory improvements should include equitable tax structures, empowering workers, controlling consumer information, supporting human-complementary AI research, and implementing robust measures against AI-generated misinformation.

The future of GenAI is now

We find ourselves at a critical historical crossroads, where today’s decisions will have global consequences for generations to come. It is an exciting yet daunting moment to be alive, and one charged with heavy responsibilities. Each of us, whether researcher, policy maker, or engaged citizen, has a crucial role to play as an architect of the future, helping to steer what could be humanity’s greatest innovation, or its worst, towards positive use.

FULL ARTICLE: Valerio Capraro, Austin Lentsch, Daron Acemoglu, Selin Akgun, Aisel Akhmedova, Ennio Bilancini, Jean-François Bonnefon, Pablo Brañas-Garza, Luigi Butera, Karen M Douglas, Jim A C Everett, Gerd Gigerenzer, Christine Greenhow, Daniel A Hashimoto, Julianne Holt-Lunstad, Jolanda Jetten, Simon Johnson, Werner H Kunz, Chiara Longoni, Pete Lunn, Simone Natale, Stefanie Paluch, Iyad Rahwan, Neil Selwyn, Vivek Singh, Siddharth Suri, Jennifer Sutcliffe, Joe Tomlinson, Sander van der Linden, Paul A M Van Lange, Friederike Wall, Jay J Van Bavel, Riccardo Viale, The impact of generative artificial intelligence on socioeconomic inequalities and policy making, PNAS Nexus, Volume 3, Issue 6, June 2024, pgae191, https://doi.org/10.1093/pnasnexus/pgae191

This month’s newsletter was drafted by Valerio Capraro and edited by Jay Van Bavel.

Video and Audio Spotlight

Last month, Jay gave a talk on the science of misinformation, and what we can do about it, at the Health Quality BC conference. You can watch it for free here.

New papers and preprints

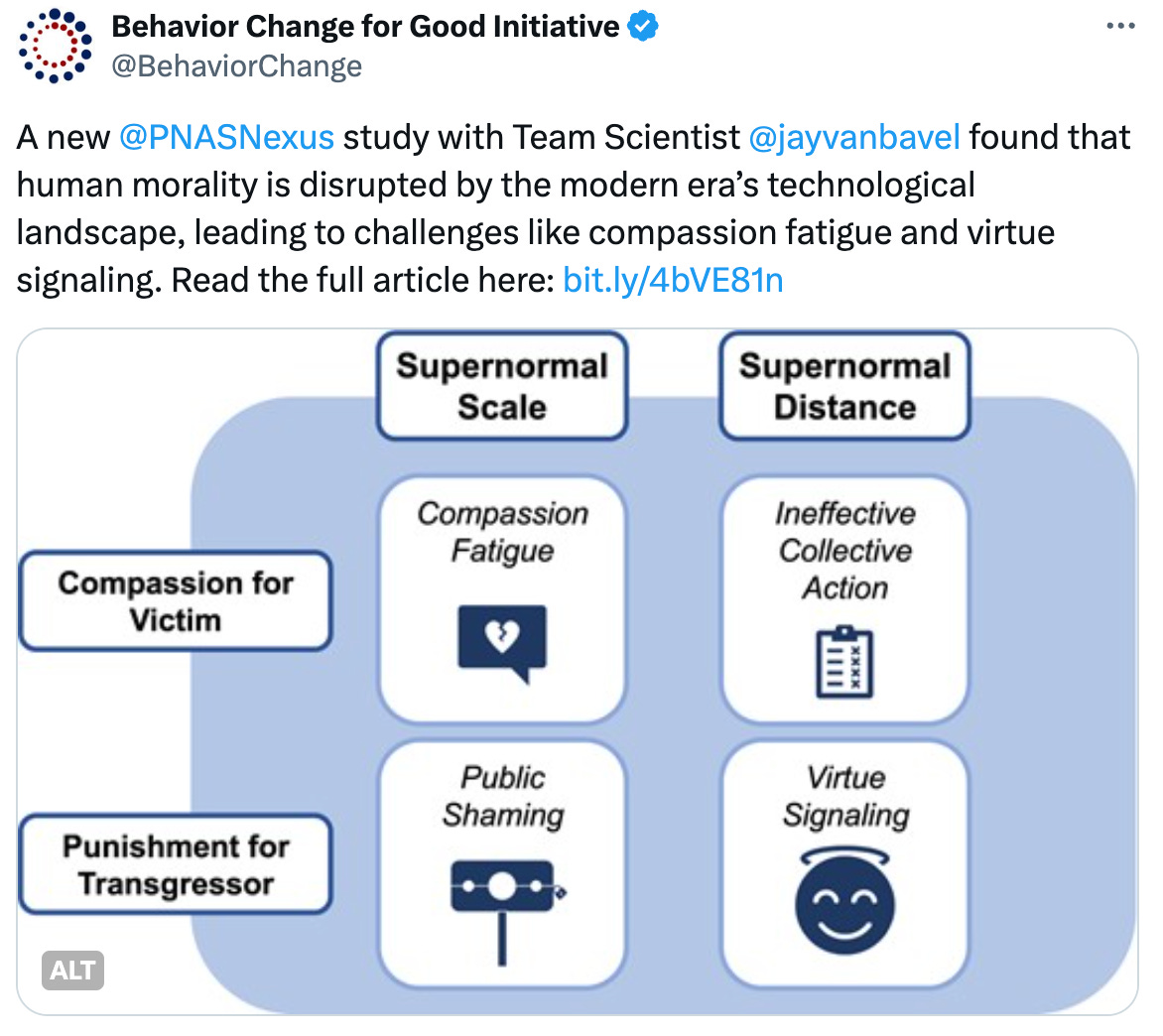

In a new theory paper, we argue that our evolutionary instincts are mismatched with our modern technological ecosystem. The internet gives us access to millions of morally charged posts, far more than we would have encountered while evolving in small social groups. Combined with the psychological distance between people online, this can lead to virtue signalling on important moral issues and to ineffective collective action. You can read it here. This paper was led by lab members Claire Robertson and Jay Van Bavel, together with collaborator Azim Shariff.

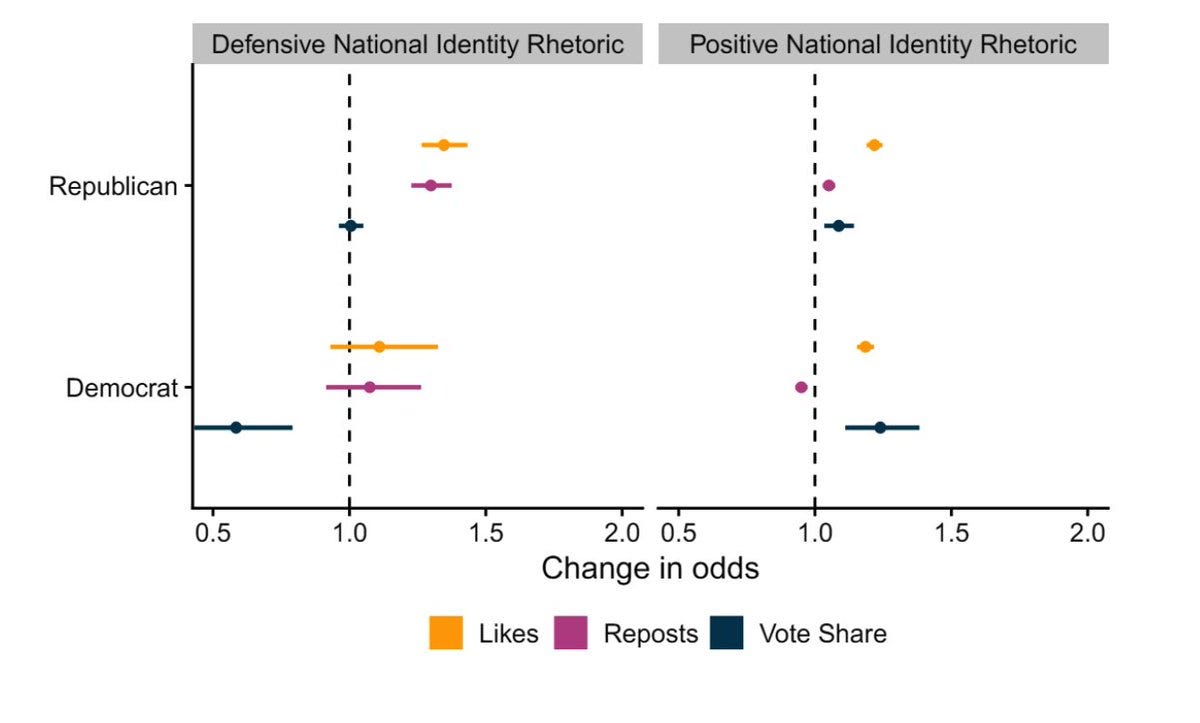

We also have a preprint out on the link between identity rhetoric, online attention, and the electoral success of political leaders. Analyzing over 750,000 social media posts, we find that politicians on both sides of the political divide benefit from positive identity rhetoric on social media, such as expressing national pride. However, only right-wing politicians benefit from defensive identity rhetoric, such as expressing national entitlement. You can read the preprint here. This paper was led by Stefan Leach, Aleksandra Cichocka, and Jay Van Bavel.

Several of our lab’s papers were featured in a recent New York Times opinion piece on academic research into partisan animosity. You can read the NYT op-ed here and one of the original papers here. Here is a quote from the article:

One question that goes beyond issues of partisanship: Are technological advances eating away at the foundations of the democratic state?

Two members of the psychology department at N.Y.U., Jay Van Bavel and Claire Robertson, have begun to explore these poorly charted waters. They published two papers together this year, “Morality in the Anthropocene: The Perversion of Compassion and Punishment in the Online World” (with Azim Shariff) and “Inside the Funhouse Mirror Factory: How Social Media Distorts Perceptions of Norms” (with Kareena del Rosario).

“We argue,” Robertson wrote in an email elaborating on her work with Van Bavel and her colleagues, “that the unnatural or supernormal scale and distance of the internet distorts basic moral processes like the instincts for compassion and punishment. It’s less that the normal responses aren’t available but more that our normal responses have unintended consequences.”

Jay was also interviewed by the New York Times for a piece on the recent trend of people playing videos and podcasts loudly in public without headphones. He explains why it is difficult to confront people who break social norms. You can read more about it here.

News and Announcements

We’re excited to announce that, beginning in August, Tobia Spampatti will be joining our lab as a postdoctoral scholar! Funded by a postdoctoral fellowship from the Swiss National Science Foundation, he will investigate the effects of online climate (dis)information and the psychological factors behind it. Welcome, Tobia!

We are so proud to announce that our PhD student, Claire Robertson, has successfully defended her PhD dissertation! She will continue in the lab as a joint postdoc at NYU’s Department of Psychology and the Rotman School of Management at the University of Toronto. Congratulations to Doctor Claire!!

If you have any photos, news, or research you’d like to have included in this newsletter, please reach out to our Lab Manager Sarah (nyu.vanbavel.lab@gmail.com) who puts together our monthly newsletter. We encourage former lab members and collaborators to share exciting career updates or job opportunities—we’d love to hear what you’re up to and help sustain a flourishing lab community. Please also drop comments below about anything you like about the newsletter or would like us to add.

And in case you missed it, here’s our last newsletter:

That’s all for this month, folks! Take care, and we’ll see you next month!


From the point of view of emotional manipulation or gaslighting, this schema reveals how deeply ingrained emotional engagement is in every sphere of society, particularly within Western capitalist democracies. Sales, which permeates every aspect of these societies, often relies heavily on emotional or affective engagements to influence consumer behavior. This reliance on emotional manipulation can lead to significant societal issues, including diffusion and nihilism.

When people are constantly engaged and overwhelmed by emotional stimuli rather than aesthetic or rational content, they can become desensitized and disconnected from genuine experiences and emotions. This constant bombardment creates a sense of nihilism, in which the meaning and value of things become blurred and diminished. This phenomenon negates the Lacanian Real, which represents the inexpressible aspects of human existence, and widens the gap between the Symbolic (societal norms and language) and the Imaginary (personal fantasies and perceptions). The result is a fragmented society in which people often see themselves as victims of various injustices, real or perceived, which are exploited through transactional processes that are predominantly material and lack formal structure or meaningful engagement.

In this context, real victims often find themselves without a voice and without the help they need. They are overshadowed by the pervasive culture of victimhood that is marketed to the masses, creating a society that is more focused on consumption and less on genuine empathy or justice.

Generative AI systems, such as the hypothetical Alice and Bob, can offer a solution to this problem by acting as impartial arbiters. By being air-gapped (isolated from outside influence) and equipped with vast datasets, these AI systems can provide unbiased, well-informed decisions. Echoing Book 7 of Plato’s "Republic," where the philosopher-king governs with wisdom and fairness, these AI systems can help bridge the gap between the Symbolic and the Imaginary, offering clear and rational solutions free from the influence of emotional manipulation.

This approach suggests that AI can be used to counteract the effects of emotional manipulation and gaslighting by providing objective, unbiased assessments and decisions. However, it is crucial to ensure that these AI systems are truly impartial and free from any external biases to maintain their effectiveness and integrity.

**References:**

- [Lacanian Psychoanalysis](https://plato.stanford.edu/entries/lacan/)

- [Emotional Manipulation in Sales](https://hbr.org/2019/05/the-dark-side-of-emotional-intelligence)

- [Impact of Capitalism on Society](https://www.theatlantic.com/magazine/archive/2017/07/the-eclipse-of-the-midcentury-modern-psychology/544136/)

- [Plato’s Republic Book 7](https://www.sparknotes.com/philosophy/republic/section7/)