The Misleading Count: Changing the design of social media to fight misinformation

A social norm-based intervention is 5X more effective than other popular interventions at reducing the spread of misinformation

Do you ever notice that some of the most liked and engaged-with posts on your social media feeds are also filled with misinformation? Misinformation is a serious problem on nearly all social media sites. On X (formerly known as Twitter), false stories spread faster and deeper than true stories, especially when they concern emotional and political topics. And a recent analysis found that 1 in 5 news posts on TikTok contained misinformation. It was even worse for news about COVID vaccines and threats to democracy, affirming prior evidence that the spread of false content can have negative consequences for democracy and public health.

Scholars and platforms have developed different interventions to counter misinformation on social media, but these interventions are often less effective against misinformation that is politically polarizing. To find a better solution, we set out to develop an intervention that might work better against polarized content. In a recent paper led by lab alum Clara Pretus, along with Ali Javeed, Diána Hughes, Kobi Hackenburg, Manos Tsakiris, Oscar Vilarroya, and Jay Van Bavel, we developed and tested an identity-based intervention against misinformation on social media.

Online moderation is one way to deal with the problem of misinformation, but online moderators often are not familiar with the context of posts or respond to the content long after it has already gone viral. Other interventions such as accuracy nudges (where social media users see visual cues that remind them to be accurate) have also been developed, but a pair of recent meta-analyses find that their effect is relatively weak—especially for conservatives. Thus, a scalable, context-sensitive intervention that is effective across political groups is needed.

We have previously found that people are more likely to believe and share misinformation when they are motivated more by their partisan identity than by accuracy goals. However, online misinformation is also set in the context of social media’s interactive environment, where engagement metrics, such as likes, can incentivize (and monetize) the spread of misinformation. Therefore, we tried to design a solution that would incentivize more accurate content by signaling healthier social norms around reporting fake news.

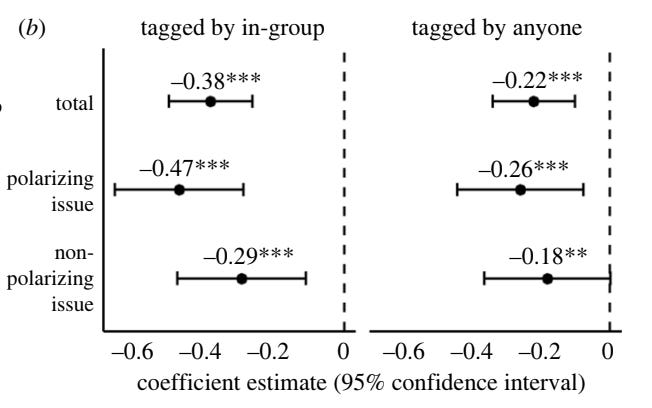

Specifically, we developed a “Misleading” count added to social media posts (see bottom right in figure below). On each post, we simply showed how many members of a user’s own political party had marked it as misinformation (similar to a like or share button). This identity-based intervention was tested in three pre-registered online experiments (N = 2513) in both the US and the UK.

Participants across the political spectrum were asked how likely they would be to share simulated social media posts by political leaders from their own party. These posts contained misinformation about politically polarizing issues (e.g., immigration and homelessness). One group of participants was told that the Misleading count showed judgments from their fellow partisans, and another group was told that it reflected judgments from general users. We then compared the results of this intervention to other popular online misinformation interventions, like accuracy nudges and Twitter’s official tag that prevents Tweets from being shared, liked, or reposted.

The number of people who reported they would be likely to share misleading social media posts dropped by 25% in response to the Misleading count showing in-group judgements, as compared with 5% in response to an accuracy nudge.

The in-group Misleading count was also more effective than the Misleading count showing the judgment of general users, and it worked especially well for polarizing issues (see figure below). This underscores the power of in-group norms for reducing the spread of misinformation. However, this effect was found only in the US, which is a more politically polarized context than the UK. Strikingly, extreme partisanship did not make the intervention less effective.

These results provide initial evidence that identity-based interventions may be more effective than neutral ones for addressing partisan-based misinformation. The Misleading count may be an effective, crowd-sourced guard against misinformation online. The fact that it seems to be more effective in polarized contexts like the US suggests that such contexts give people more incentives to conform to partisan group norms, or more disincentives for not conforming to them.

Our intervention was tested in a series of controlled experiments, using curated social media posts. To see whether such a button would work on real social media platforms, future research in more ecologically valid contexts is needed. For example, in the real world, bad actors could use bots to make new accounts and abuse the ‘Misleading’ button on posts that are actually true.

Pretus, C. G., Javeed, A. M., Hughes, D., Hackenburg, K., Tsakiris, M., Vilarroya, O., & Van Bavel, J. J. (2024). The misleading count: An identity-based intervention to counter partisan misinformation sharing. Philosophical Transactions of the Royal Society B, 379, 20230040. doi.org/10.1098/rstb.2023.0040

This month’s newsletter was drafted by Sarah Mughal & edited by Jay Van Bavel.

New Papers & Pre-Prints

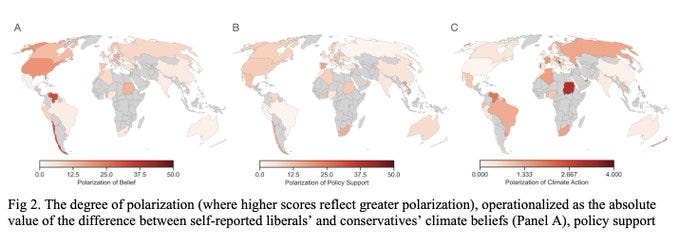

We published a new paper in Nature Communications on how we can overcome polarization on climate action. In a global experiment led by Mike Berkebile-Weinberg, Daniel Goldwert, Jay Van Bavel, Kim Doell, and Madalina Vlasceanu, we tested the impact of 11 interventions on liberals and conservatives in a large sample (N = 51,224) recruited in 60 countries. You can read the paper here.

We also wrote an op-ed about this paper for TIME Magazine if you want to read an accessible summary of our research.

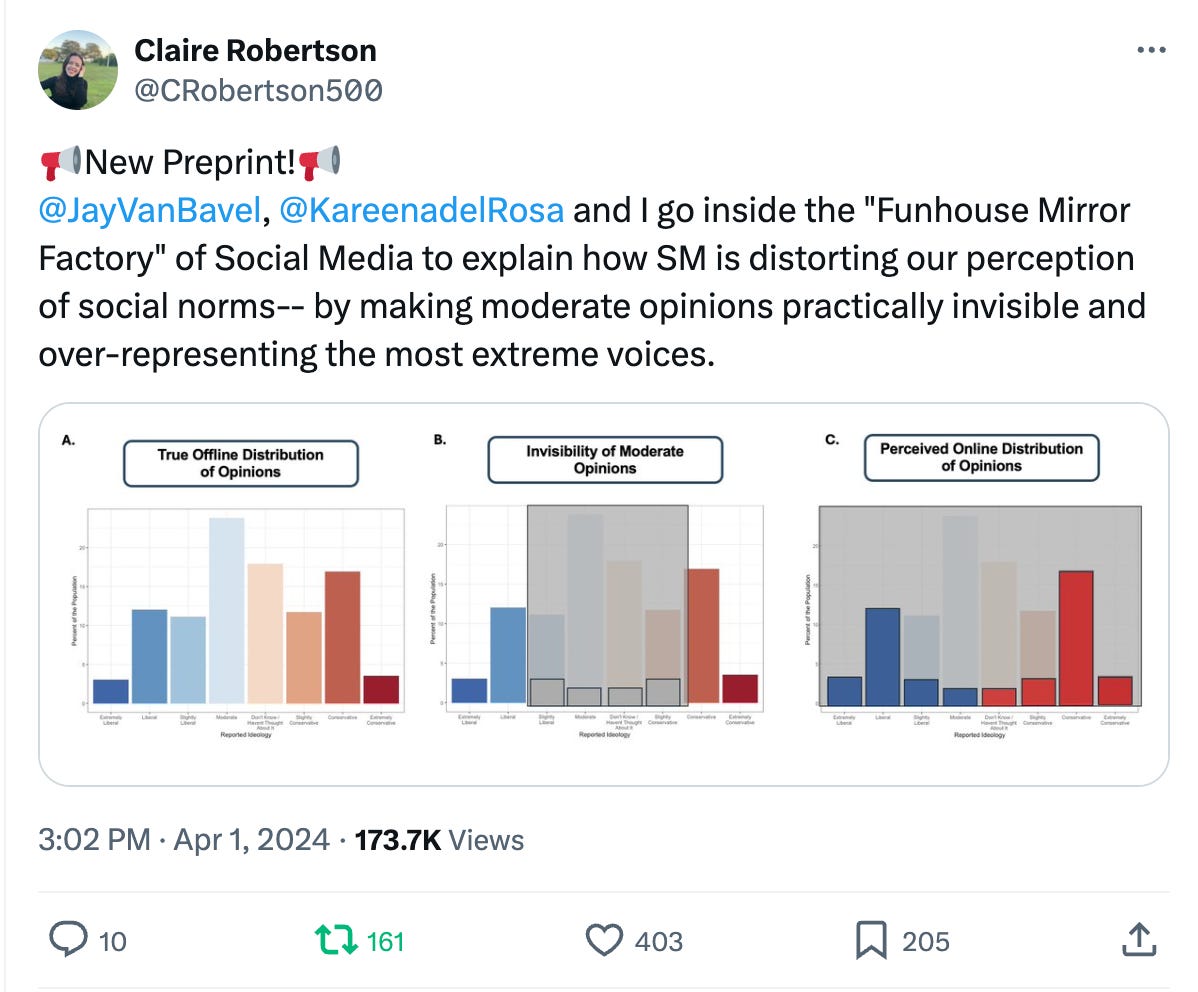

We also posted a new pre-print where we describe how extreme posts on social media distort our perception of what’s normative to believe and what isn’t. The paper brings together research from political science, psychology, and cognitive science to explain how online environments become saturated with false norms, what happens when these online norms deviate from offline norms, and why expressions are more extreme online. This paper was led by Claire Robertson, Kareena del Rosario, and Jay Van Bavel. You can read more here.

News, Announcements & Outreach

Congratulations to lab members Rachel Leshin, Kareena del Rosario, and Claire Robertson for winning a number of the NYU Psychology Department’s 2024 Fellowships and Awards! Rachel Leshin was awarded the Fryer Award for Best Thesis, and Claire Robertson received the Ted Coons Graduate Student Summer Stipend Award. Kareena del Rosario received both the Katzell Summer Fellowship and the Coons Graduate Student Travel Award. Congratulations to all!

This month, Jay spoke at an event for the World Bank, discussing how people with the most extreme beliefs post on social media more than people with moderate beliefs, leading to social media algorithms only showing polarizing and divisive content.

He also spoke at the Learning and the Brain conference, talking about the impacts of AI on education to hundreds of educators. Here’s a picture of him and grad student Claire Robertson at our lab’s exhibition booth!

Jay also weighed in on a webinar for PEN America on what journalists can do to counter misinformation in a vital election year. You can read a recap of the webinar here, and watch the YouTube video here:

Jay was also featured on Derek Thompson’s podcast Plain English, where he explained 4 dark rules for online engagement. You can listen to the podcast episode on Spotify.

Jay also spoke with Michael McQueen about polarization, social dynamics, and more, for the Behavior Change for Good Initiative’s Meet the Experts Series! You can watch it here.

If you have any photos, news, or research you’d like to have included in this newsletter, please reach out to our Lab Manager Sarah (nyu.vanbavel.lab@gmail.com) who puts together our monthly newsletter. We encourage former lab members and collaborators to share exciting career updates or job opportunities—we’d love to hear what you’re up to and help sustain a flourishing lab community. Please also drop comments below about anything you like about the newsletter or would like us to add.

And in case you missed it, here’s our last newsletter:

That’s all for this month, folks. Thank you for reading, and we’ll see you next month!