Moral Hypocrisy: Social Groups and the Flexibility of Virtue

We attempted to replicate a social psychology study on intergroup moral hypocrisy

Moral hypocrisy refers to how people continue to think of themselves as good, moral beings even after committing immoral acts, reframing these immoral actions to justify this belief. But our sense of morality is not purely individualistic; it is also influenced by the social groups we belong to.

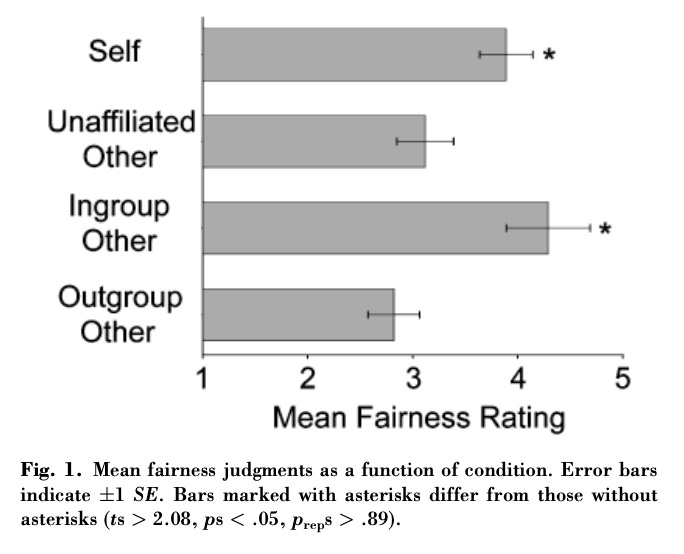

Past research has found that we tend to favor members of groups we belong to, and disfavor people who belong to other groups. For example, Piercarlo Valdesolo and David DeSteno conducted a classic social psychology experiment where they randomly assigned people to groups, and found that people judged the same immoral act (giving a difficult task to someone else and an easy task to yourself) differently based on who was the perpetrator. Giving someone a harder task was judged to be more fair when the person themselves was the perpetrator (self), or when someone from their group was the perpetrator (Ingroup Other, in the figure below). In other words, our moral hypocrisy extends to our fellow group members—we let them off the hook just like we let ourselves off the hook!

In a recent paper, we tried to replicate Valdesolo & DeSteno’s work and see if their findings still held. We also tried to improve the study in three ways: (1) expanding the sample size (and therefore the statistical power); (2) adding new analyses; and (3) extending the experiment to real-world groups (political partisans), to see if the findings held in real life. We also wanted to see whether the strength of people's identification with their groups affected their moral hypocrisy towards in-group members.

In our first study, we recruited participants from an online survey platform and randomly assigned them to an “overestimator” or an “underestimator” group regardless of their answers on an estimation task. After discussing their group placement in an online chat with three other participants also assigned to these groups, they completed examples of a green “easy” task and a red “hard” task.

Participants were then told that the researchers were using a new method to determine what tasks participants would complete, where a small subsample of participants would distribute the tasks. These participants could either use a computer randomizer to randomly assign tasks to participants, or they could choose the task they wanted to complete and the researchers would assign the other task to a different participant. Thus, people who chose the easy task for themselves would be forcing another participant to do the hard task, a moral transgression.

Participants were then randomly assigned to one of four conditions: one where they chose what task to complete themselves (most of them chose the easier task!), and three conditions where they were told another participant was choosing a task for them, and ended up with the harder task. In these three conditions, they either didn’t learn anything about the other participant, learned that the other participant was an in-group member, or learned that they were an out-group member. We then conducted a second study where everything was the same, except instead of assigning participants to random groups, we sorted them based on whether they identified as Democrats or Republicans.

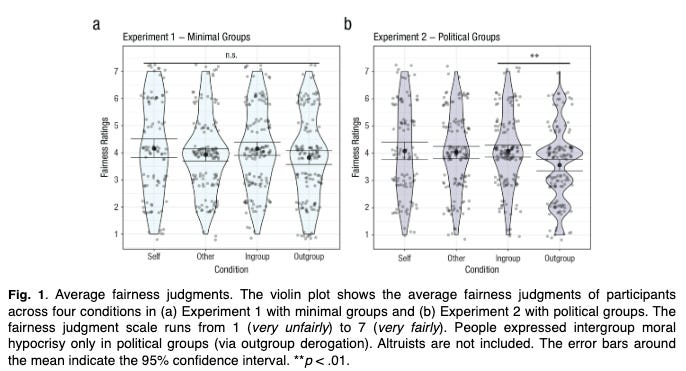

To our surprise, our results did not replicate those of the original study. They nevertheless led us to believe that group identity does create moral hypocrisy.

In the random groups experiment, which replicated the original study most closely, we found that people did not evaluate themselves and their ingroup more fairly compared to the out-group and others. However, people who identified more strongly with their group identity did judge in-group members more fairly. Thus, we did find evidence of moral hypocrisy among highly identified group members.

In the experiment with partisans, we again found that people did not evaluate themselves and their ingroup more fairly. However, we found evidence of intergroup moral hypocrisy where people judged their ingroup members as acting significantly more fairly compared to outgroup members. In other words, moral hypocrisy was alive and well among partisans.

While we didn’t perfectly replicate the results of the initial study, we did find evidence that group membership biases our moral judgment. Specifically, people who identified more strongly with a minimal group showed more in-group favoritism. And partisans judged members of the opposing political party more harshly than their in-group members. We found this pattern among both Democrats and Republicans, which aligns with a general trend of increasing affective polarization and partisan sectarianism in the US even in non-political contexts.

Overall, our work suggests that intergroup biases in moral judgments are driven by in-group favoritism in minimal groups, and out-group derogation in partisans. People have a flexible sense of virtue.

This original work was led by Claire Robertson. This summary was written by Sarah Mughal and edited by Jay Van Bavel.

Citation: Robertson, C.E., Akles, M., & Van Bavel, J.J. (2024). Preregistered Replication and Extension of “Moral Hypocrisy: Social Groups and the Flexibility of Virtue”. Psychological Science, 35(7), 798-813.

New Papers and Preprints

Our paper “The Costs of Polarizing a Pandemic: Antecedents, Consequences, and Lessons” was officially published in Perspectives on Psychological Science. In this paper, we discuss how every aspect of the COVID-19 pandemic was polarized, from social distancing to vaccination, with deadly consequences for public health in the United States. You can read more here. This article was led by Jay Van Bavel, Clara Pretus, Steve Rathje, Philip Pärnamets, Madalina Vlasceanu, and Eric Knowles.

Van Bavel, J. J., Pretus, C., Rathje, S., Pärnamets, P., Vlasceanu, M., & Knowles, E. D. (2024). The Costs of Polarizing a Pandemic: Antecedents, Consequences, and Lessons. Perspectives on Psychological Science, 19(4), 624-639. https://doi.org/10.1177/17456916231190395

We also have a new paper out in PNAS on using GPT for psychological text analysis. In this paper, we find evidence that GPT is highly effective in analyzing text for various psychological constructs. We also created a tutorial that researchers can use in their own work. You can read more here, and watch the R tutorial on YouTube here. This paper was led by Steve Rathje, Dan-Mircea Mirea, Illia Sucholutsky, Raja Marjieh, Claire Robertson, and Jay Van Bavel.

Rathje, S., Mirea, D., Sucholutsky, I., Marjieh, R., Robertson, C., & Van Bavel, J. J. (2024). GPT is an effective tool for multilingual psychological text analysis. Proceedings of the National Academy of Sciences, 121(34). https://doi.org/10.1073/pnas.2308950121

News and Announcements

Jay was featured on two different panels at the recent APA convention: one on public health and misinformation, and one on how to write a good popular science book. Check out the video clip below!

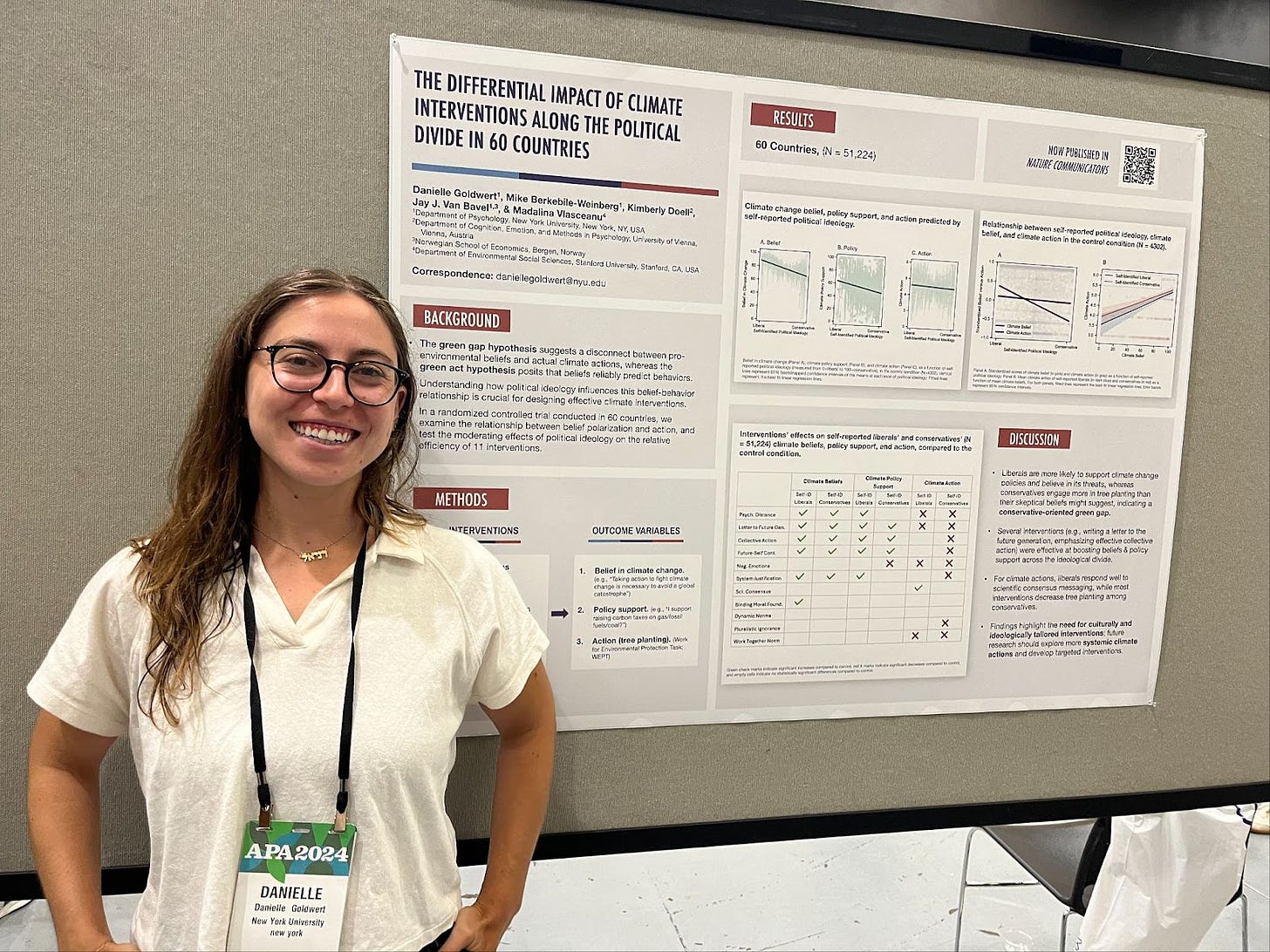

At the same convention, lab member Danielle Goldwert presented a poster based on her paper, “The Differential Impact of Climate Interventions Across the Political Divide in 60 Countries”.

Finally, lab alumna Madalina Vlasceanu won the Raymond S. Nickerson Award from the APA for her paper “Political and nonpolitical belief change elicits behavioral change”! The Raymond S. Nickerson Award recognizes an article for enduring impact in the area of applied experimental psychology, and for representing an original empirical investigation in experimental psychology that bridges practically oriented problems and psychological theory. Congratulations to Madalina!

Our post-doc Steve Rathje recently wrote a letter to the editor at the New York Times in response to an article on banning smartphones in schools; he wrote on the need for rigorous empirical studies on the use of smartphones in schools before supporting such bans. Read it here!

Jay discussed The Psychology of Identity and Fostering Social Harmony with Scott Barry Kaufman on The Psychology Podcast this week. They discussed how to escape your echo chambers and overcome your biases, the role social media plays in creating a funhouse mirror, and how to make connections with fellow humans even if they're in your out-group.

Lab member Laura Globig will be co-presenting a symposium at SESP’s annual conference. Congrats to Laura on this exciting opportunity!

And in other fun news, our new postdoc Raunak Pillai joined the lab and our research assistant Shaye-Ann McDonald was also visiting NYU from Duke University!

If you have any photos, news, or research you’d like to have included in this newsletter, please reach out to our Lab Manager Sarah (nyu.vanbavel.lab@gmail.com) who puts together our monthly newsletter. We encourage former lab members and collaborators to share exciting career updates or job opportunities—we’d love to hear what you’re up to and help sustain a flourishing lab community. Please also drop comments below about anything you like about the newsletter or would like us to add.


That’s all for this week, folks. See you next week!

Thanks for this post. I have a question regarding your paper, The Costs of Polarizing a Pandemic (BTW I can't read it as I don't have access, and Sci-Hub doesn't seem to have it yet). What do you think the effects of this phenomenon might be on the next pandemic, whether it's a literal one or a metaphorical scenario involving a global threat, lack of info, merchants of attention, uncertainty, and the need for global cooperation?