As we close out the semester with good spirits and holiday cheer, we at the Social Identity and Morality Lab now present a recap of our research from this year. To read any month’s newsletter in greater detail, click on the name of that month. Thank you for another great year, and we hope to keep in touch throughout the next.

To kick off the review, here is a meme from one of Jay’s all-time favorite movies—The Big Lebowski.

Publications & New Research

January: Social identity shapes morality

In January’s newsletter, we described a new chapter that proposed a value-based model of decision-making. Specifically, we argued that social identity influences moral cognition by affecting three aspects of decision-making: (1) preferences and goals, (2) expectations, and (3) outcomes that people consider. For example, social identities can affect preferences and goals by leading people to care more about outcomes that affect in-group members compared to outcomes that affect out-group members. This explains how social identity shapes moral judgments and decisions.

February: Social media and vaccine hesitancy

In February, we covered new research on social media behavior and vaccine hesitancy. We found that interacting with low-quality news sources on social media was associated with vaccine hesitancy behaviors, even after controlling for multiple demographic variables. For Americans, following Republican politicians and conservative media sources, relative to following Democratic politicians, was also associated with vaccine hesitancy behaviors, but UK participants did not exhibit such politically polarized behaviors. Vaccine-hesitant and vaccine-confident people on social media also seemed to cluster into online ‘echo chambers’, which in the US aligned with political networks on social media. The cross-cultural differences between the US and the UK results seem to suggest that both cues from partisan political elites and misinformation sources shaped vaccine attitudes.

March: Morality in the Pandemic

In March, we talked about some of our lab’s findings regarding morality during the COVID-19 pandemic. In one of our papers, we found that three aspects of morality predicted whether or not people would support public health measures to fight the pandemic: moral identity, morality-as-cooperation, and moral circles. These three aspects, as well as benevolence and universalism, were correlated with actual pro-social behaviors during the pandemic.

We had several other publications this month, including a paper that found that people are more likely to read, believe, and share false news if it aligns with their partisan identity (with politically engaged individuals being more susceptible to this effect), and a paper that found that negative words in news headlines drove media consumption, increasing click-through rate by 2.3%. Finally, our paper showing that moral beliefs and emotions are a prominent factor differentiating feelings of hate from feelings of dislike was also published.

April: Political devotion drives misinformation sharing

In April, we discussed our research on political devotion as it relates to the sharing of misinformation online. We found that two aspects of political devotion (identity fusion and sacred values) were related to the spread of misinformation on the far right. Specifically, far-right partisans in Spain and the US who highly identified with Trump were more likely to share social media posts with misinformation, particularly if the posts had something to do with sacred values like immigration. Importantly, misinformation interventions like fact-checking and accuracy nudges were not effective for these populations, suggesting that strong political beliefs can overpower people’s desire for accuracy. Neuroimaging of 36 far-right partisans in Spain also revealed that misinformation related to sacred values triggered strong neural responses in brain areas involved in norm compliance and theory of mind, which suggests that people might share information related to sacred values in an attempt to conform to their group.

May: Individual-Level Solutions Can Support System-Level Change

In May, we talked about our commentary published in Behavioral & Brain Sciences in response to Chater & Loewenstein’s (2022) article arguing that focusing on individual solutions to larger problems actively undermines systemic change. We argued that individual-level solutions don’t necessarily take away from efforts towards systemic change. Specifically, individual behavioral interventions that tap into people’s social identities may help support systemic change by inducing positive spillover effects that help mitigate the impact of social problems while system-level changes are being implemented. This is because positive spillover effects are more likely to occur when a decision or behavior is based on a social role or identity.

This month, we also posted a preprint examining GPT’s usefulness for psychological text analysis. Compared to traditional dictionary-based text analysis, GPT is much better at detecting psychological constructs in text data and performs nearly as well as other fine-tuned machine learning methods (though it performs worse at detecting such constructs in African languages, and worse than more recent large language models). We also had a preprint come out showing that, while corrections have a small effect in reducing the spread of misinformation, fact checks of misinformation are more likely to backfire when provided by a political out-group member.

June: Address Societal Challenges with Behavior Change Strategies

In June, we dove into our work investigating the impact of changing beliefs on behaviors. In this paper, we found that new evidence changed people’s beliefs for both political and non-political topics, leading to changes in behaviors such as donations. However, there was a strong effect of political partisanship in the results: only Democrats were able to change other Democrats’ beliefs on topics relevant to their party. Political out-group members could not change the beliefs of members of the other group, and Republicans could not change the beliefs of other Republicans. Alternative strategies for belief change may be necessary to change Republicans’ behaviors.

This month, we also had a preprint regarding social media and morality, where we found that social media may act as an accelerant for existing moral dynamics, amplifying negative aspects like outrage while potentially amplifying prosocial behaviors as well.

July: How to motivate people to share more accurate news

July’s newsletter revolved around this paper about what motivates people to share accurate news. We found that small monetary incentives can motivate people to more accurately identify inaccuracies in news headlines, suggesting that motivation, not just lack of knowledge, can be a driving factor in the spread of misinformation. The effect of this incentive was stronger for Republicans than for Democrats, suggesting that Republicans might share more fake news because they are more motivated to believe those false headlines. Money was not the only effective incentive either; social incentives like reputation and emphasizing social norms also increased accuracy in identifying fake headlines. However, other motivational factors (like identifying inaccuracies in news that might be liked by members of one’s political party) can reduce people’s accuracy in identifying misinformation.

August: Does social media drive polarization?

In August’s newsletter, we talked about this theory paper that was published on the SPIR model of social media and polarization. We propose that four factors might determine the relationship between social media and affective polarization: Selection, Platform design, Incentives on social media, and Real-world behavior. People tend to select polarizing information, which social media platforms encourage through the design of the algorithms that govern these platforms. People are also incentivized to post in ways that will garner them a lot of positive social feedback via views, likes, and shares. The more likes such posts get, the more people will be encouraged to post similarly provocative content. Finally, social media posts affect offline behavior in both positive and negative ways. For example, both the #MeToo movement and the January 6th insurrection at the U.S. Capitol originated from online movements.

September: Crowdsourced accuracy judgments can reduce the spread of misinformation

September’s newsletter was about the impact of crowdsourced accuracy judgments on the spread of misinformation. For this paper, our lab developed an identity-based intervention for misinformation on social media called the Misleading Count: a “Misleading Count” button, placed next to the Like button, that showed the number of people who had tagged a social media post as misleading. This intervention reduced people’s likelihood of sharing misinformation by 25%, and was especially effective when judgments came from in-group members. This increased effectiveness was driven by the U.S. respondents for the study (suggesting that partisans are more attuned to group norms in polarized contexts), and was just as effective for extreme partisans.

This month, we also published work showing that most people think that social media should not amplify divisive content, but that in reality it does. Instead, people think that educational, accurate, and nuanced content should go viral, but that it rarely does. They tend to think this regardless of their political affiliation or demographics.

October: Introducing the Center for Conflict and Cooperation

In October, we introduced the Center for Conflict and Cooperation for the very first time! This center, housed at NYU and funded by the Templeton World Charity Foundation, will organize leading scholars to conduct global, large-scale research about the causes and consequences of ethnic, religious, and political polarization. The Center also aims to study effective interventions to reduce such polarization and animosity. Subscribe to this newsletter to stay in the loop about future projects from the center.

We also posted a preprint examining what brain areas are activated when people make certain kinds of judgments, which found that a core set of brain areas is involved in different types of moral judgments, with additional areas recruited for each type. We also found that the same action might be judged very differently depending on the moral lens people used.

November: Why we mis-predict the future: The best-case heuristic

For November’s newsletter, we delved into our research about the ‘best-case heuristic’. We asked people to make predictions about their future in three different scenarios: the worst-case scenario, the best-case scenario, and the most realistic scenario. Previous research suggests that one way people might try to accurately guess the future is by averaging across multiple guesses for the future to calculate the most likely scenario. However, when asked to make a realistic prediction for the future, people actually leaned heavily towards a more optimistic best-case scenario over a realistic prediction. What people want to happen comes to mind first, and so that’s what they respond with.

This month, we also published a paper examining the costs of polarizing the COVID-19 pandemic, finding that the enormous death toll of the pandemic in the United States was preventable and largely due to the political polarization of the pandemic. Our lab had a preprint come out about how social media takes advantage of people’s innate tendency to attend to threatening stimuli by algorithmically promoting content that captures attention, against users’ expressed preferences. We had another preprint regarding updates to the identity-based model of belief, which incorporates the most recent research about misinformation as well. We also contributed to a report for the APA and CDC about how psychological science can be used to combat the spread of health misinformation, with 8 recommendations for doing so. Our global many-labs collaboration on the effectiveness of different climate interventions was also officially accepted for publication.

December: Can neuroscience contribute to the fight against climate change?

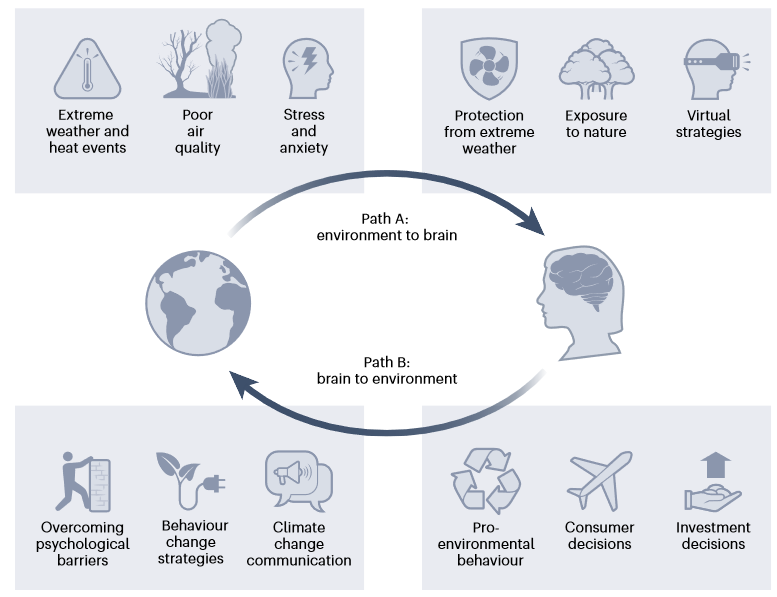

December’s newsletter covered this paper about how neuroscientists can help the fight against climate change through two different paths. Path A, environment-to-brain, covers how neuroscientists can investigate the effects of climate change on the brain and develop strategies to protect it or make it more resilient to these changes. Path B, brain-to-environment, covers how neuroscientists can investigate the neural basis of the cognitive and affective processes that lead people to engage in pro-environmental behaviors, and help us to understand what drives or inhibits sustainable decisions. We also discuss ways to make the field of neuroscience itself more sustainable.

In December, we published a review in Nature that evaluated the accuracy of social and behavioral policy recommendations during the COVID-19 pandemic. Reviewing over 700 papers, we found that there was evidence for 89% of our central claims, with the most strongly supported claims being those that anticipated culture, polarization and misinformation would be associated with policy effectiveness.

Thank you so much for reading our newsletter this past year! We look forward to seeing you in the next.
