Does social media drive polarization?

Our new paper provides a model explaining how social media might increase affective polarization. We also have a new lab manager job posting and some other exciting news.

As of 2023, nearly 5 billion people worldwide have a social media account, which they use to learn about and share news and to interact with other people. There is a heated debate among scientists, journalists, and the public about the impact of social media. Is it a net benefit for society, or is it corrosive to our well-being and democratic institutions?

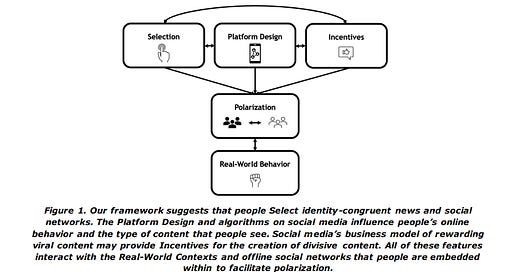

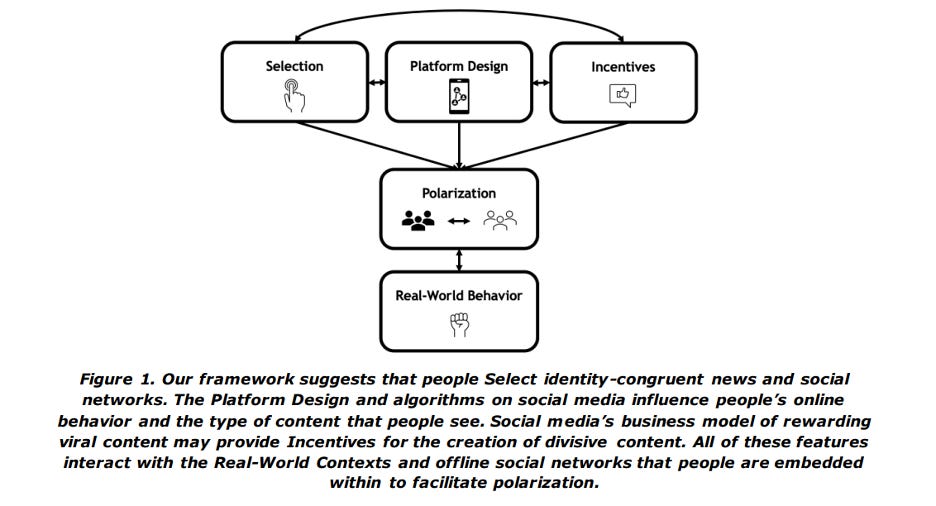

One area we have been studying is whether social media causes polarization. Affective polarization is the tendency of people from one political party to dislike and distrust members of the other party. In a new theoretical paper by Elizabeth Harris, Steve Rathje, Claire Robertson, and Jay, we provide a model explaining how social media might amplify affective polarization (although our primary focus was on polarization in the US, we believe the model might be useful in other places and times).

In our model, called the SPIR Model, we describe four factors that explain the relationship between social media and polarization. We discuss (1) the selection that happens on social media, (2) the way social media platforms are designed, (3) the incentives that people are given on social media, and (4) the role of real-world behavior.

Selection

People select polarizing information. There is a vast amount of information available on the internet. For example, on YouTube alone, users upload 500 hours of video per minute. Out of that abundance of information, people tend to read news that fits with what they already believe. People do the same with sources. For example, Democrats are more likely to get news from liberal sources and follow liberal accounts on Twitter, while Republicans are more likely to do the same with conservative sources and accounts. Finally, people “select” into groups on social media that match their political identity and ideology. For example, people are three times more likely to follow back Twitter users who share their party membership.

Platform Design

There are a few ways that the design of some social media platforms can exacerbate polarization. One major way is through algorithms. When social media platforms recommend news and videos in people’s newsfeeds, they base those recommendations on what they know about each person, and they tend to show people content they are likely to agree with. For example, Facebook appears to infer a person’s political beliefs from how they use the platform and then shows them information in line with those beliefs. Likewise, new research finds that Twitter’s recommendation algorithm amplifies anger, out-group hostility, and affective polarization. Finally, some social media accounts are automated “bots” that use polarizing rhetoric and can stoke arguments about divisive topics, such as vaccination on Twitter.

Incentives

When people post on social media, they’re usually hoping that their post will get a lot of views, shares, and likes. This reward incentivizes people to post in certain ways. Research finds that posts about the other political group (usually negative ones), as well as posts that make people feel outraged, are liked and shared the most on social media. The more likes these posts get, the more likely the user is to keep posting content that makes people feel outraged. Divisive content may succeed online because it grabs our attention. Users of different social media platforms have learned that, if they want to go viral, it is best to post something attention-grabbing, and negativity about the other political party seems to spread especially widely.

Real-world Behavior

Social media posts can affect how people behave offline. For example, the U.S. conspiracy theory group QAnon grew dramatically online and, at one point, had as many as 30 million followers. In January 2021, QAnon’s online posts and community contributed to an insurrection at the U.S. Capitol committed by people who believed the false claim that the 2020 U.S. presidential election was stolen. However, it is important to remember that social media can also lead to positive offline behavior, such as helping people organize the #MeToo movement and the Arab Spring protests.

Our article summarizes a growing literature on the impact of social media on intergroup polarization. Our theoretical framework can be used to inform both theoretical accounts of social media’s relationship to polarization and real-world solutions for reducing polarization on social media. We have been using this framework to try to identify more effective strategies for reducing conflict and the spread of misinformation.

Note that we are talking about social media platforms in general, but they are all different. For example, researchers observed polarizing social dynamics on Facebook and Twitter but not on Reddit, Gab, or WhatsApp. This month, Meta, Facebook’s parent company, launched Threads with the stated aim of making social media a healthier environment. Whether it succeeds remains to be seen, but thinking about these issues could help in designing a healthier social media environment for countless people.

New Papers and Preprints

Our research was recently featured in a New York Times article called “The politics of delusion have taken hold”. Here is a quote:

There are other problems with efforts to lessen the mutual disdain of Democrats and Republicans.

A May 2023 paper by Diego A. Reinero, Elizabeth A. Harris, Steve Rathje, Annie Duke and Jay Van Bavel, “Partisans Are More Likely to Entrench Their Beliefs in Misinformation When Political Out-Group Members Fact-Check Claims,” argued that “fact-checks were more likely to backfire when they came from a political out-group member” and that “corrections from political out-group members were 52 percent more likely to backfire — leaving people with more entrenched beliefs in misinformation.”

In sum, the authors concluded, “corrections are effective on average but have small effects compared to partisan identity congruence and sometimes backfire — especially if they come from a political out-group member.”

The rise of contemporary affective polarization is a distinctly 21st-century phenomenon.

How susceptible are you to misinformation? There’s a test you can take. Our former research assistant, Yara Kyrychenko, just published a Misinformation Susceptibility Test (MIST). Results from this new misinformation quiz suggest that, contrary to the stereotype, younger Americans have a harder time identifying fake headlines than older generations do. You can read a nice description of their research in the latest issue of Scientific American.

What motivates people to share more accurate news? In our last newsletter, we discussed several new papers and findings about misinformation and more.

Videos and News

Interested in social identity, morality, and collective cognition? Madalina Vlasceanu and Jay are hiring a new LAB MANAGER who will work jointly across the two labs! If you know anyone who is interested in topics like conflict, cooperation, misinformation, polarization, or climate change, please tell them to join us! Applications will be accepted until the position is filled, so please encourage them to apply ASAP. We plan to start reviewing applications by August 1st. Here is the application portal with a complete job description. Join us at New York University this fall!

We also have exciting news: our former visiting postdoc, Clara Pretus, has joined Universitat Autònoma de Barcelona, one of the top-ranked universities in Spain, as an Assistant Professor of Methods in Behavioral Sciences! She is officially launching the Social Brain Lab. You can visit her new lab in Barcelona and stay tuned for the latest news and open positions.

Finally, the New York University Department of Psychology is hiring three new tenure-track professors! One is in SOCIAL PSYCHOLOGY, another is in HIGHER COGNITION, and a third is in SOCIAL COGNITIVE SCIENCE for a person who straddles these two areas. The deadline is September 1. Please apply at the links below and share with anyone who might be interested!

Social psychology: https://apply.interfolio.com/128189

Higher cognition: https://apply.interfolio.com/128188

Social cognitive science: https://apply.interfolio.com/128191

As always, if you have any photos, news, or research you’d like to have included in this newsletter, please reach out to the Lab Manager (nyu.vanbavel.lab@gmail.com) who writes our monthly newsletter. We encourage former lab members and collaborators to share exciting career updates or job opportunities—we’d love to hear what you’re up to and help sustain a flourishing lab community. Please also drop comments below about anything you like about the newsletter or would like us to add.

That’s all, folks—thanks for reading and we’ll see you next month!
