How to motivate people to share more accurate news

Our new papers on motivations behind being discerning towards misinformation, how partisanship influences misinformation sharing and resistance to fact-checking, morality in the pandemic and more

Why do people believe in and share misinformation? Do people simply not know the facts? Or does the incentive structure of the partisan news media environment and social media drive people to believe in and share misinformation?

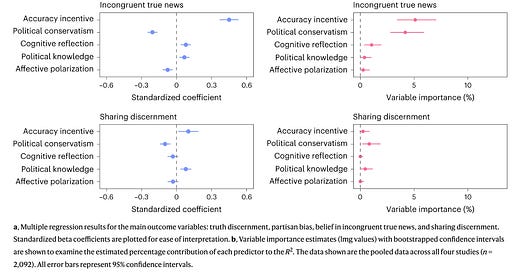

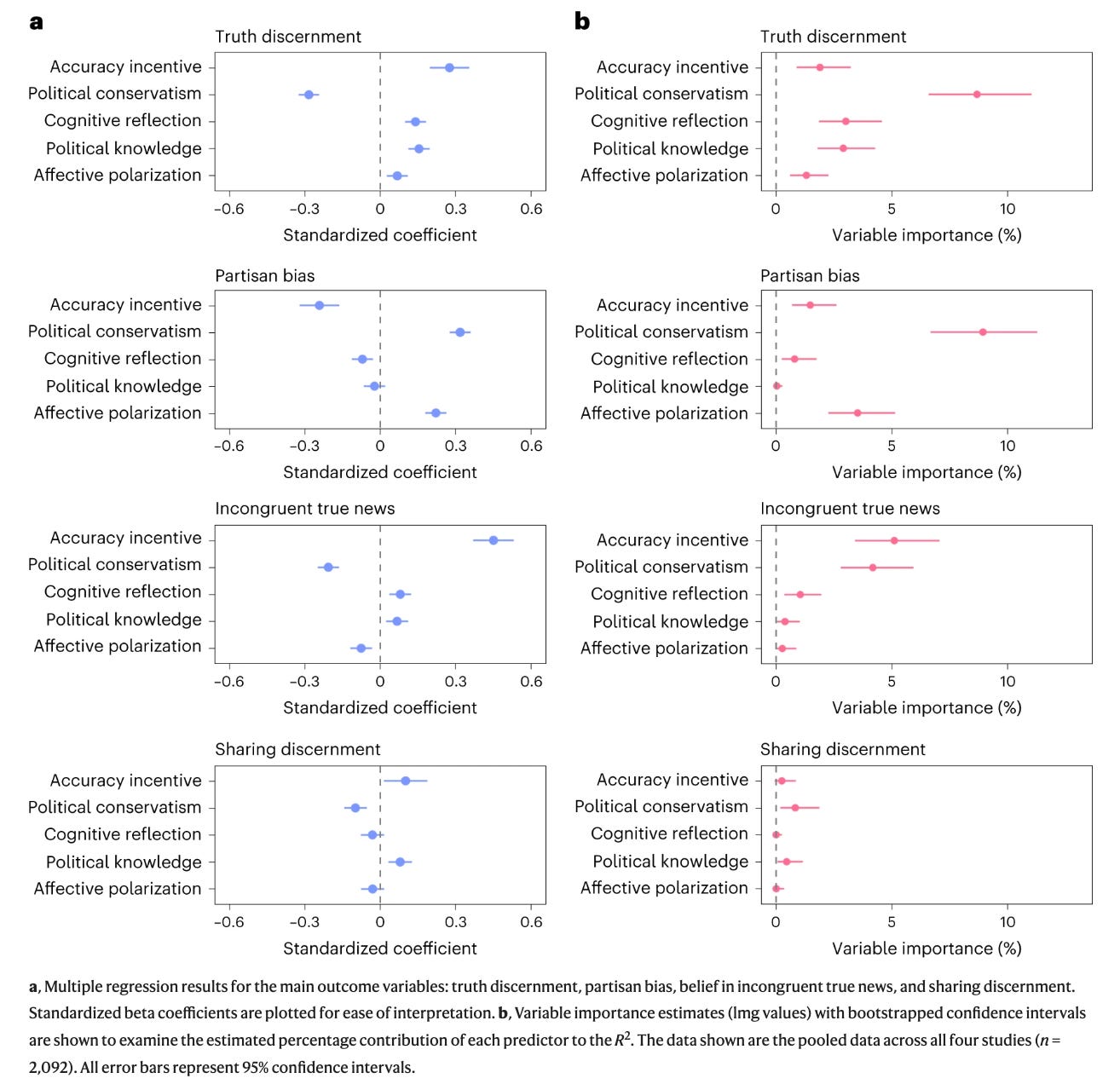

In a new paper by Steve Rathje, Jon Roozenbeek, Jay Van Bavel, and Sander van der Linden, published in the journal Nature Human Behaviour, we conducted a series of studies to help address these questions. Across four experiments with over 3,300 people, we motivated half of the participants to be accurate by giving them a modest financial incentive of up to $1 if they correctly identified true and false news headlines. In other words, we paid people to correctly spot misinformation.

We found that even very small financial incentives made people 31% better at discerning true from false headlines. This suggests that people’s inability to correctly discern between true and false information does not come solely from a lack of knowledge – instead, a big part is simply a lack of motivation to be accurate.

While Republicans and Democrats are often divided about what type of news is true and false, we found that paying people to be accurate reduced the partisan divide in belief in news by about 30%. This suggests that division between partisans might not be as entrenched as it appears. Democrats and Republicans can come to more of an agreement on a shared set of beliefs if they have incentives to hold accurate beliefs.

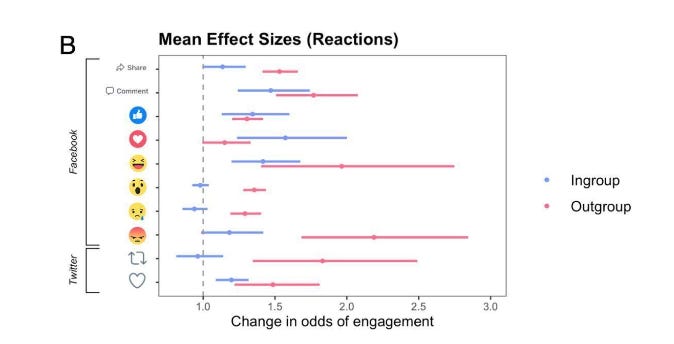

Unfortunately, the incentives to share content that appeals to one’s audience may interfere with people’s motivation to be accurate. In one of our experiments, we gave half of the participants financial incentives to identify news headlines that would be liked by members of their own political party. This was meant to mirror the social media environment, where people are incentivized to share content that is liked by their friends and followers.

This social incentive made people less accurate at identifying misinformation. It also made people report greater intentions to share news stories that aligned with their political identity. In other words, trying to identify content that will reap social rewards – which is what social media encourages us to do – interferes with our motivation to be accurate.

Incentives improved accuracy for both Democrats and Republicans, but had a stronger effect for Republicans. In fact, while a number of prior studies show that Republicans tend to believe in and share more misinformation, this gap in accuracy between Democrats and Republicans closed considerably when Republicans were motivated to be accurate. This means that Republicans’ tendency to believe in less factual information than Democrats is not simply due to knowledge gaps or personality differences – a large part of it seems to be motivational.

Money is not the only effective way to make people care more about the truth. We found that emphasizing the reputational benefits of being accurate, telling people that sharing accurate content is a social norm, and telling people they would receive feedback about how accurate they are increased the perceived accuracy of true news from the opposing party.

While it may not be possible to pay everyone on the internet to share more accurate information, we can change aspects of the social media platform design or the broader incentive structure of the partisan media environment to motivate people to be accurate at scale.

Misinformation can be profitable: research shows that false rumors and posts disparaging political opponents have vast potential to go "viral" on social media. Our paper suggests that we need to re-think the current incentive structure to reward people for caring about the truth – as opposed to rewarding them for creating content that affirms the identity of their audience.

Check out a Twitter thread about the paper here and view a TikTok discussing it here.

New Papers and Preprint

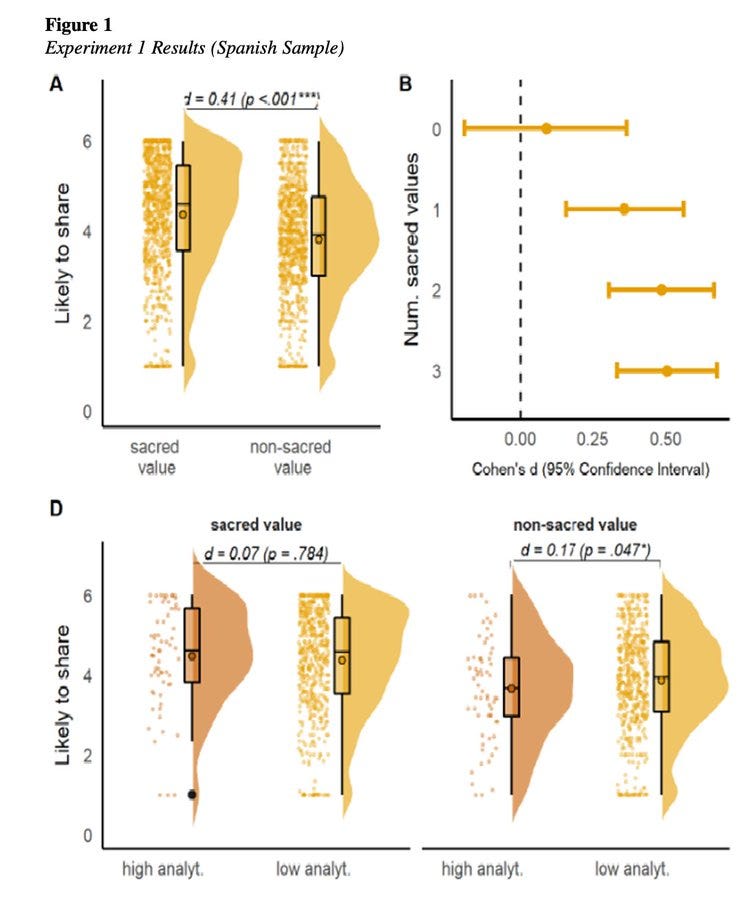

On the topic of combating the spread of misinformation, we have another new paper out this month by Clara Pretus, Camila Servin-Barthet, Elizabeth Harris, William Brady, Oscar Vilarroya and Jay in the Journal of Experimental Psychology: General. They conducted a cross-cultural experiment with conservatives and far-right partisans in the United States and Spain (N = 1,609) and a neuroimaging study with far-right partisans in Spain (N = 36) to understand misinformation sharing among the far-right. Their results suggest that the two components of political devotion (identity fusion and sacred values) play a key role in misinformation sharing, with far-right partisans being more likely to share misinformation and unresponsive to fact-checking and accuracy nudges. Read the paper here for more details:

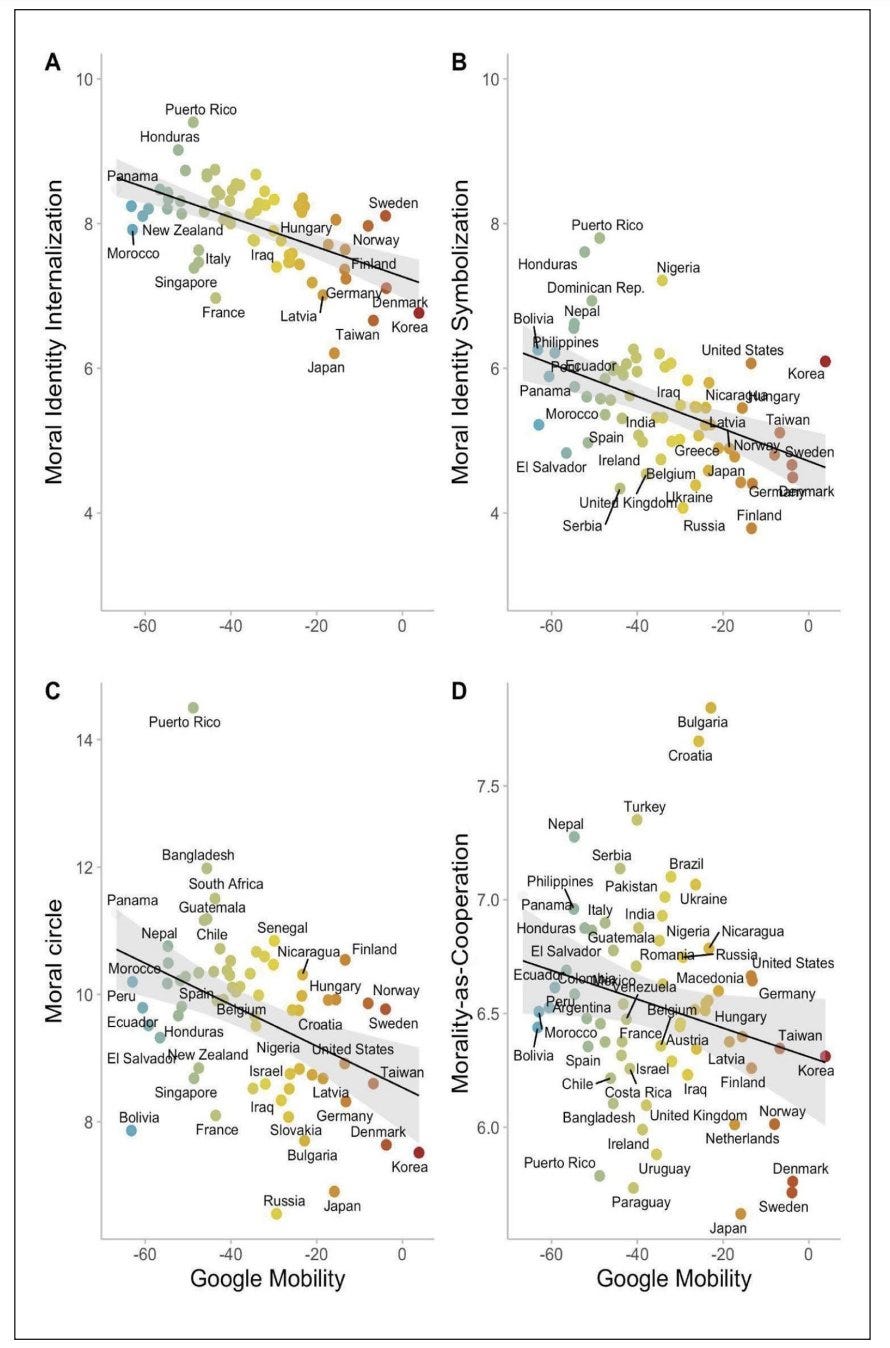

This month, we have another paper published by Jay and a group of collaborators (led by Paulo Boggio) on morality in the pandemic in Group Processes & Intergroup Relations. They conducted a large-scale global study (68 countries; N = 46,457) and analyzed Google mobility data (42 countries) to examine what factors influence people's attitudes and behavior towards public policies aimed at preventing the spread of pandemics. Their results suggest that morality is associated with both support for public health policies and actual behavior during the pandemic. Specifically, the study reveals that people with a strong moral identity, cooperation-oriented morality, and wider moral circles were more likely to engage in public health behaviors and support related policies. Read the paper here:

We also have a new preprint by Jay, Claire Robertson, Kareena del Rosario, Jesper Rasmussen and Steve Rathje, forthcoming in the Annual Review of Psychology, exploring the relationship between social media and morality. The authors argue that social media accelerates existing moral dynamics, amplifying outrage, intergroup conflict, and status seeking. It also has the potential to enhance positive aspects of morality, such as social support and collective action. The review highlights current trends and future directions in this field of research. Read the preprint here:

Videos and News

We released a new working paper last month, led by Steve Rathje and Dan-Mircea Mirea, exploring the use of GPT, the language model behind ChatGPT, for automated psychological text analysis in multiple languages. We tested the ability of GPT (3.5 and 4) to detect psychological constructs in text across 12 languages, and found that it is superior to many existing methods of automated text analysis. Steve and Dan recently made a 15-minute tutorial video about how to analyze text data using the GPT API in R. Watch the YouTube video here to learn more:

If you are interested in advances in AI and how they influence the field of psychology, make sure to catch this TikTok made by Steve on the topic:

We also have exciting news from our lab affiliate Clara Gomez Pretus: she was offered a tenure-track Assistant Professor position in Methods in Behavioral Sciences at the Autonomous University of Barcelona. Congratulations, Prof. Pretus!

As always, if you have any photos, news, or research you’d like to have included in this newsletter, please reach out to the Lab Manager (nyu.vanbavel.lab@gmail.com), who writes our monthly newsletter. We encourage former lab members and collaborators to share exciting career updates or job opportunities. We’d love to hear what you’re up to and help sustain a flourishing lab community. Please also drop comments below about anything you like about the newsletter or would like us to add.

That’s all, folks—thanks for reading and we’ll see you next month!