Political devotion drives misinformation sharing

Why extreme partisans share misinformation and resist fact-checking

Did you know that far-right Americans are seven times more likely to share misinformation than moderates? Research suggests that only 0.1% of social media users, mainly among the far-right, are responsible for sharing 80% of fake news.

This is a big problem because online misinformation can have real-world consequences such as political polarization, threats to democracy, and even reduced vaccination rates.

So why are far-right partisans more likely to share misinformation, and which strategies can effectively curb the spread of misinformation among this group?

Some scholars believe that people share fake news and misinformation because they don't take the time to think critically about it. Researchers have found that if you encourage people to focus on accuracy using “accuracy nudges”, they are less likely to share fake news. However, there is a debate about whether this approach really works, especially for people on the far right.

Other researchers have argued that partisanship is a major factor that drives people to share misinformation. This is because individuals tend to believe information that affirms their political identity or ideology. This is particularly relevant for individuals who strongly identify with a group (identity fusion) and hold rigid positions on group issues (sacred values). These highly "devoted" partisans may be motivated to share misinformation to promote their cause, similar to a form of information warfare.

Our research team (Clara Pretus, Camila Servin, Elizabeth Harris, William Brady, Oscar Vilarroya, and Jay Van Bavel) tested whether these two aspects of political devotion—identity fusion and sacred values—are related to the spread of misinformation among the far-right and the efficacy of various interventions to combat it. You can read our pre-print here and the paper will be appearing in the Journal of Experimental Psychology: General in a few months.

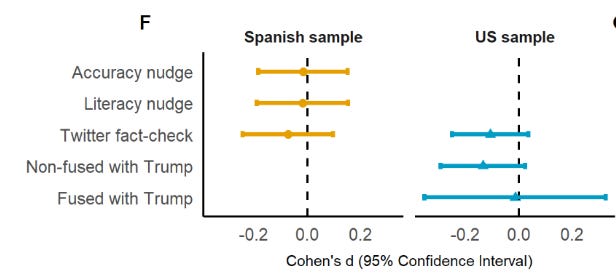

We tested these hypotheses in two experiments with conservatives and far-right partisans in the U.S. and Spain. We asked participants to rate the likelihood of sharing social media posts with misinformation related to sacred values (such as immigration) and non-sacred values (such as infrastructure). Participants were split into three groups and exposed to different interventions: a Twitter fact-check, an accuracy nudge, and a media literacy nudge.

In line with the political devotion hypothesis, far-right partisans in Spain and US Republicans who strongly identify with Trump were more likely to share misinformation than other Republicans and center-right voters, especially if the misinformation was related to sacred values (such as immigration).

Importantly, fact-checks and accuracy nudges were ineffective for these populations (with no effect compared to control trials). Devoted partisans shared just as much misinformation whether or not they were fact-checked. This suggests that people's strong political beliefs can sometimes overpower their desire for accuracy.

We also ran a neuroimaging study with far-right partisans in Spain to identify which brain networks are involved in processing misinformation related to sacred values. We found that misinformation related to sacred values triggered a strong neural response in several brain regions involved in norm compliance and theory of mind, which is the ability to understand other people's beliefs and intentions. The results suggest that people may share messages relevant to sacred values in an attempt to conform to their group.

Overall, our findings provide evidence that political devotion and group conformity are significant factors driving misinformation sharing and resistance to fact-checks and accuracy nudges.

Our study highlights the need to address social identity and group processes when trying to decrease the spread of misinformation among far-right partisans, who share a disproportionate amount of misleading content. We also hope to study the same issues with far-left voters in the future.

New Papers

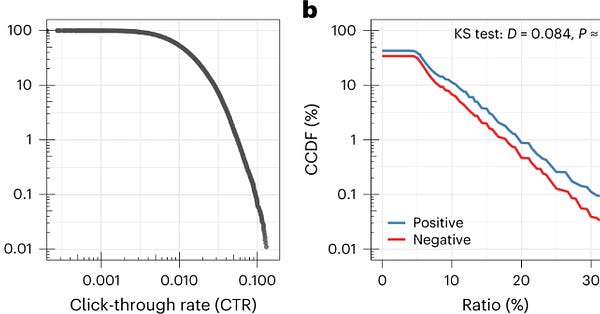

In our new paper published in Nature Human Behaviour, Claire Robertson, Nicolas Pröllochs, Kaoru Schwarzenegger, Philip Pärnamets, Jay and Stefan Feuerriegel analyzed the impact of negative and emotional words on online news consumption using a dataset of viral news stories. The study found that headlines with negative words increased consumption rates while positive words decreased them. Each additional negative word in an average-length headline increased the click-through rate by 2.3%. Read the full paper here:

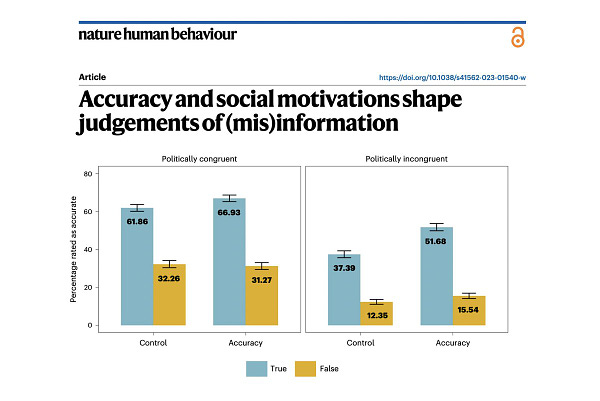

This month, we have another paper published by Steve Rathje, Jon Roozenbeek, Jay and Sander van der Linden. They find that among US participants, financial incentives for accurate responses about true and false political news headlines improved accuracy and reduced bias by 30%, mainly by increasing the perceived accuracy of true news from the opposing party. However, incentivizing people to identify news liked by their political allies decreased accuracy. Together, these results indicate that motivational factors play a significant role in people's judgments of news accuracy. Read the full paper here or watch Steve’s TikTok video for more on this study:

Do beliefs predict behaviors? The answer is often yes for both political and nonpolitical issues. Taking this a step further, in our recent paper, Madalina Vlasceanu, Casey McMahon, Jay and Alin Coman examined whether belief change predicts behavior change. The picture is more complicated for political issues. The study found that belief change triggered behavioral change only for Democrats on Democratic topics, but not for Democrats on Republican topics or for Republicans on either topic. Read the paper here to learn more.

Podcast and Outreach

Jay was recently interviewed on The Passion Struck Podcast, where he discussed how to become the architect of your own identity. The full episode is available on YouTube here:

Jay and his book co-author, Dominic Packer, just launched their podcast, The Power of Us. The first episode, on the roots of polarization, aired recently. Listen and follow them on Spotify or on Apple Podcasts.

After publishing her work on negativity and online news consumption, Claire shared the behind-the-scenes story of this study, in which potential conflicts were turned into collaboration. Check it out here:

News and Photos of the Month

Congratulations to our former visiting scholar, Clara Pretus, on landing a new job in the Behavioral Insights Academy at the United Nations Office for Counter-Terrorism, where she will provide training and mentoring to practitioners, UN program managers, civil society organizations, and member state government officials in charge of counter-terrorism programs.

To celebrate Jay’s birthday, the lab gathered and baked a cake together:

Happy birthday Jay!

The lab also took a new lab photo during last week's lab meeting!

As always, if you have any photos, news, or research you’d like to have included in this newsletter, please reach out to the Lab Manager (nyu.vanbavel.lab@gmail.com) who writes our monthly newsletter. We encourage former lab members and collaborators to share exciting career updates or job opportunities—we’d love to hear what you’re up to and help sustain a flourishing lab community. Please also drop comments below about anything you like about the newsletter or would like us to add.

That’s all, folks—thanks for reading and we’ll see you next month!