Crowdsourced accuracy judgments can reduce the spread of misinformation

Our latest paper finds that crowdsourced accuracy judgments can act like a healthy social norm, leading people to reduce their sharing of misinformation by 25%.

Ninety-five percent of Americans identify misinformation as a problem when they are trying to access important information!

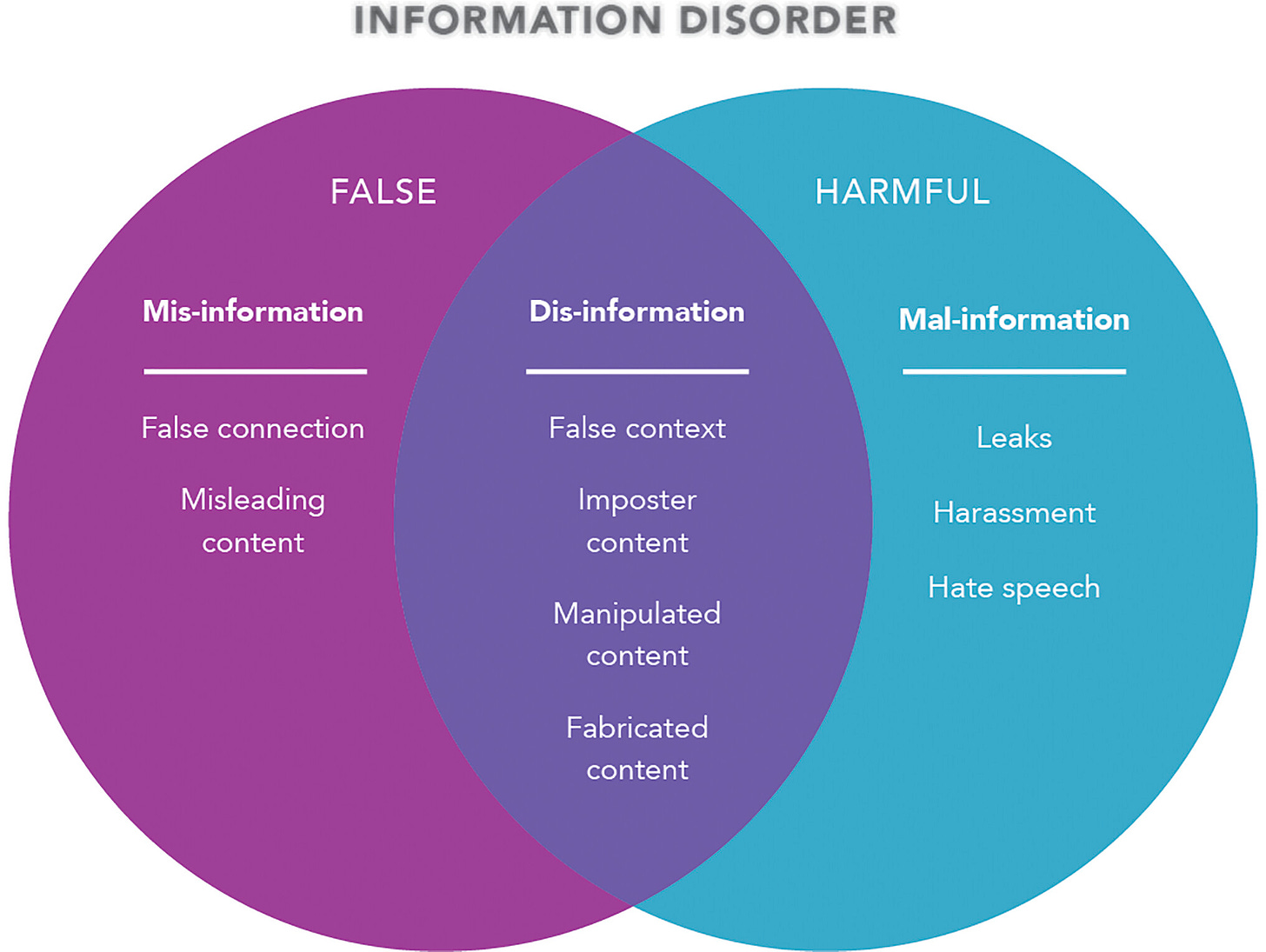

Unfortunately, social media giants have struggled to stem the tide of falsehoods and conspiracy theories around the globe. The existing content moderation model often falls short—failing to correct misinformation until it has already gone viral. To combat this problem, we need systemic changes in social media infrastructure that can effectively thwart misinformation.

A popular moderation-free approach to tackling misinformation is "accuracy nudging": using cues to prompt users to think about accuracy. This strategy is based on the assumption that sharing misinformation stems from inattention or a lack of critical thinking. However, our recent meta-analysis (led by Steve Rathje) found relatively weak effects of these nudges, particularly among far-right supporters, who are frequently linked to the spread of misinformation. Hence, there is a pressing need for impactful, scalable strategies to minimize political misinformation that are effective across the political spectrum, especially among the groups most likely to spread it.

In a new set of experiments in the US and the UK, we (Clara Pretus, Ali Javeed, Diána Hughes, Kobi Hackenburg, Manos Tsakiris, Oscar Vilarroya, and Jay Van Bavel) developed and tested an identity-based intervention: the Misleading Count. This approach leverages the fact that misinformation is usually embedded in a social environment with visible social engagement metrics. We simply added a Misleading Count button next to the Like count that reported the number of people who had tagged a social media post as misleading.

This intervention was designed to reveal a simple social norm—that people like you found the post misleading. We found that the Misleading Count reduced people’s likelihood of sharing misinformation by 25%. Moreover, it was especially effective when these judgments came from in-group members.

We conducted three online experiments involving Democrats and Republicans in the US and Labour and Conservative voters in the UK. Participants were presented with posts by in-group political leaders that contained inaccurate information. We tested two versions of the Misleading Count: one where it represented accuracy judgments from the in-group (e.g., fellow Democrats) and another where it represented accuracy judgments from strangers. We also compared its effectiveness to established methods such as the accuracy nudge and the standard Twitter fact check.

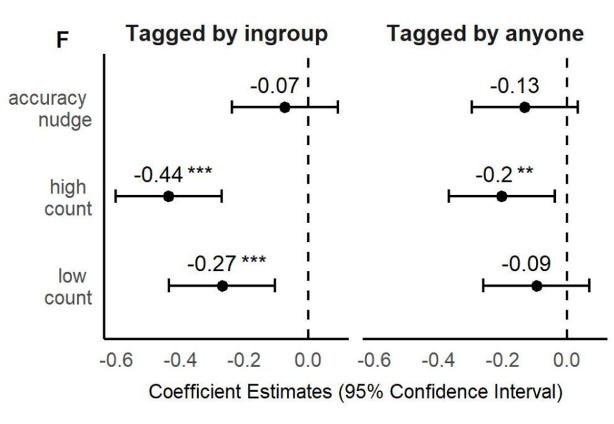

We found that liberals and conservatives in the US and the UK were 25% less likely to share partisan misinformation in response to the Misleading Count, compared to 5% less likely in response to the accuracy nudge. Crowdsourced accuracy judgments were more effective when they came from the in-group (e.g., fellow Republicans) than from strangers (as seen in the figure below):

The increased effectiveness of in-group accuracy judgments over generic ones was driven by US respondents, who were more politically polarized than UK respondents. This suggests that partisans are more attuned to group norms in polarized contexts.

Remarkably, the intervention was just as effective for extreme partisans (as gauged by high identification with a political party or its leaders). In addition, our intervention was more effective in reducing misinformation sharing on polarizing issues (e.g., immigration) compared to non-polarizing issues (e.g., infrastructure). This is an important aspect of our intervention since polarizing content is more likely to be shared than non-polarizing content. Group norms seem to be most effective when the issues at stake have a high social signaling value!

In sum, our results provide evidence that identity-based interventions can be more effective than identity-neutral interventions in reducing misinformation sharing. This could be due to people's tendency to align with group norms, especially during decisions that have a high social signaling value. Moreover, these interventions could be easily crowdsourced by simply asking people if they find content misleading.

One open question is whether people blindly align with group norms, or whether exposure to group norms that conflict with their expectations gives them an opportunity to deliberate and become more accurate. Either way, this is at least a first step towards an intervention that might be scalable and effective across the political spectrum on polarized issues.

Our paper will be published in a forthcoming issue of Philosophical Transactions of the Royal Society B. To read a free preprint of the paper: https://psyarxiv.com/7j26y/

New Papers and Preprints

We have a new paper published this month in the International Journal of Communication that describes a framework we created to understand how Selection, Platform Design, Incentives, and Real-World Context (we call it the SPIR framework) might explain social media’s role in exacerbating polarization and intergroup conflict. It was led by Elizabeth Harris, Steve Rathje, and Claire Robertson.

Rather than simply asking whether social media as a whole causes polarization, our paper examines how each of these processes can spur polarization in certain contexts. Our paper explains how these features of social media can act as an accelerant, amplifying divisions in society between social groups and spilling over into offline behavior. We discuss how interventions might target each of these factors to mitigate (or enhance) polarization. We wrote a summary of the paper in our newsletter last month if you want to learn more.

We also had a brief commentary published in Behavioral and Brain Sciences explaining how individual-level solutions may support system-level change if they are internalized as part of one's social identity (this was led by Lina Koppel, Claire Robertson, Kim Doell, Ali Javeed, Jesper Rasmussen, Steve Rathje, and Madalina Vlasceanu). We agree that system-level change is crucial for solving society's most pressing problems. However, individual-level interventions may be useful for creating behavioral change before system-level change is in place and for increasing the public support that system-level solutions need.

Participating in individual-level solutions may increase support for system-level solutions, especially if the individual-level solutions are internalized as part of one's social identity. As such, the dichotomy between individual-level and system-level change may be a false one. It is people who drive system-level change, and finding a way to get them committed to change may ultimately be the bigger problem. Again, we wrote a preview of this paper in an earlier newsletter if you want to read more.

Videos and News

Our research on political extremists (led by Clara Pretus) was featured in Forbes this month, where they wrote:

“Understanding the emotional and societal factors behind misinformation spread is key. The issue isn’t just about debunking false information — it also involves addressing extremists’ need for social belonging and identity affirmation. In a world divided by “my truth” and “your truth,” striving for critical media literacy and open dialogue can build bridges and potentially free us from the prison of our beliefs.”

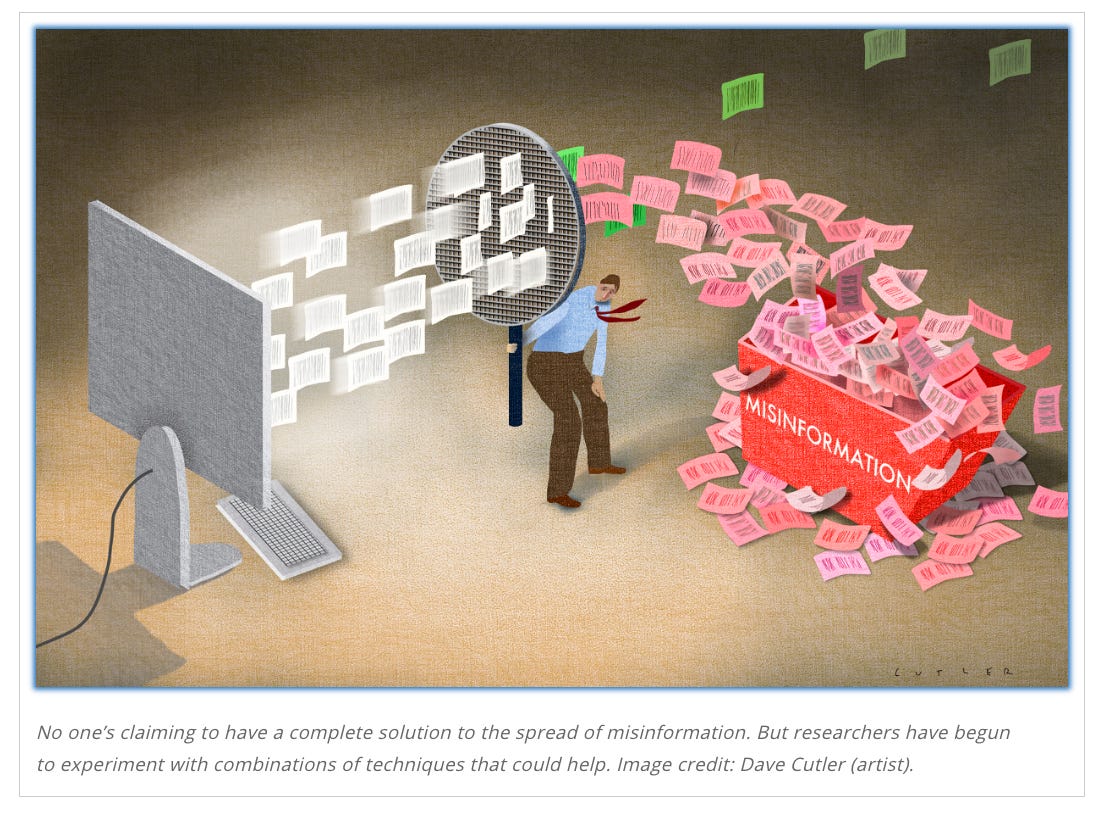

Thankfully, we have been working on a more effective antidote to misinformation! The research we described at the beginning of this newsletter was featured in a news article in the Proceedings of the National Academy of Sciences (PNAS) on “How to mitigate misinformation”.

As the column notes, “A toxic mix of confusion, misdirection, manipulation, and outright lies now threatens both public health and our collective faith in democratic elections—not to mention any common understanding of truth.” This deep dive on misinformation in PNAS offers a nice review of the problem and some of the solutions that scientists have been working on for the past few years.

Last month we posted a job for a Lab Manager, and we are excited to announce that Sarah Mughal will be joining us as the joint Lab Manager of both the Social Identity and Morality Lab and the Collective Cognition Lab at NYU. She currently works at Brown University, but she received her BA and MA in Psychology at NYU, so she often finds herself accidentally walking into Bobst Library instead of the lab if she hasn’t had her coffee yet. Her research interests include the psychology of social media, discrimination and bias in AI/ML methods, and how psychiatric conditions like ADHD or anxiety affect social interactions and experiences. Outside of the lab, Sarah can be found writing in museums, marathoning video games, or meticulously crocheting tiny socks for plushies. Here she is as a wee little scientist in training!

We are also excited to announce that former PhD student in the lab Diego Reinero has accepted a position at the University of Pennsylvania as a MindCORE Postdoctoral Fellow starting this September! Here is Diego front and center at our lab alumni conference at NYU Florence earlier this summer, holding the group together as usual. You can now catch him in Philly, where he’ll continue studying how people connect socially and make moral judgments.

In the next few newsletters we will be featuring some new grants and projects we are launching at NYU and introducing you to two new postdocs who will be joining our lab in the next year. So stay tuned!

As always, if you have any photos, news, or research you’d like to have included in this newsletter, please reach out to the Lab Manager (nyu.vanbavel.lab@gmail.com) who writes our monthly newsletter. We encourage former lab members and collaborators to share exciting career updates or job opportunities—we’d love to hear what you’re up to and help sustain a flourishing lab community. Please also drop comments below about anything you like about the newsletter or would like us to add.

That’s all, folks—thanks for reading and we’ll see you next month!